What Is IT Discovery?

IT discovery is the process of automatically identifying and cataloging all components in a digital environment, such as hardware, software, network devices, and their configurations. It provides organizations with a real-time map of their IT assets, giving insights for efficient management, troubleshooting, and security.

Discovery is crucial to IT operations, helping teams know what exists within their environment, how it is connected, and where vulnerabilities may lie. Organizations rely on IT discovery to prevent service disruptions, ensure compliance, and support decision-making.

The process scales from small networks to complex, geographically distributed infrastructures, including on-premises and cloud assets. IT discovery forms the baseline for further operations like monitoring, patch management, and security analysis.

This is part of a series of articles about application discovery.

Core Functions of IT Discovery

1. Asset Inventory and Classification

Asset inventory and classification form the backbone of IT discovery, enabling organizations to establish a catalog of every device, system, and component connected to their network. The inventory process identifies all hardware, from servers and storage arrays to workstations, network devices, and IoT equipment. Once discovered, each asset is classified by type, function, location, and other relevant metadata, which simplifies management and reporting.

Accurate inventory and consistent classification make it possible to enforce policies, administer software updates, manage warranties, and stay on top of lifecycle management. Maintaining a clear, up-to-date asset inventory helps reduce operational risks by ensuring that nothing remains undocumented or unmanaged.
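As a rough illustration of inventory plus classification, the sketch below models each discovered asset as a record carrying type, function, and location metadata, then summarizes the inventory by any one of those attributes. The `Asset` fields and sample values are assumptions made for the example, not the schema of any particular discovery tool.

```python
from collections import Counter
from dataclasses import dataclass, field


@dataclass(frozen=True)
class Asset:
    """One discovered asset plus the metadata used to classify it (illustrative fields)."""
    asset_id: str
    asset_type: str   # e.g. "server", "switch", "workstation", "iot"
    function: str     # e.g. "database", "core-network"
    location: str     # e.g. a site name or cloud region
    tags: frozenset = field(default_factory=frozenset)


def summarize_by(assets, attribute):
    """Count assets per value of one classification attribute."""
    return Counter(getattr(asset, attribute) for asset in assets)


# Hypothetical sample inventory.
inventory = [
    Asset("srv-01", "server", "database", "dc-east"),
    Asset("sw-01", "switch", "core-network", "dc-east"),
    Asset("cam-07", "iot", "surveillance", "hq"),
    Asset("srv-02", "server", "web", "eu-west-1"),
]

print(summarize_by(inventory, "asset_type"))
print(summarize_by(inventory, "location"))
```

Because classification lives in ordinary fields, the same helper answers different reporting questions (assets per site, per function, and so on) without extra code.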

2. Software and Application Discovery

Software and application discovery identifies all installed software, running processes, versions, and license usage on each asset. This process is critical for maintaining proper software compliance, identifying unauthorized applications, and managing security vulnerabilities that may arise from outdated or unapproved software.

Discovery tools scan endpoints, servers, and virtual environments to build an accurate inventory of the software in use. Application discovery supports patch management, vulnerability assessment, and asset optimization. By knowing which software is running and where, organizations can reduce their attack surface and better control software licensing costs.
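One common use of a software inventory is checking it against policy. The sketch below compares the packages discovered on a host with a hypothetical approved list that records a minimum version per package, and flags anything unapproved or outdated. The simple dotted-number version parser is an assumption; real version strings (such as `1.1.1w` or `2.0-rc1`) need a proper version-parsing library.

```python
def parse_version(text):
    """Turn '2.4.10' into (2, 4, 10) so versions compare numerically.

    Assumes purely numeric, dot-separated versions.
    """
    return tuple(int(part) for part in text.split("."))


def review_software(installed, approved):
    """Flag unapproved packages and approved ones below the minimum version.

    installed: {package_name: version} discovered on one host
    approved:  {package_name: minimum_version} from a hypothetical policy
    """
    findings = []
    for name, version in sorted(installed.items()):
        if name not in approved:
            findings.append((name, version, "unauthorized"))
        elif parse_version(version) < parse_version(approved[name]):
            findings.append((name, version, "outdated"))
    return findings


host_software = {"openssl": "1.1.1", "nginx": "1.25.3", "torrent-client": "4.6.0"}
policy = {"openssl": "3.0.0", "nginx": "1.24.0"}

for finding in review_software(host_software, policy):
    print(finding)
```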

3. Network and Endpoint Mapping

Network and endpoint mapping visualizes the structure of IT environments by discovering how devices are connected and how they communicate. This function uncovers routers, switches, and firewalls, and traces the paths data takes across the network. Accurate topology maps enable administrators to understand segmentation, identify misconfigurations, and troubleshoot connectivity or performance issues.

Endpoint mapping further identifies user devices, virtual machines, and unmanaged or rogue hardware that may connect to the network. These maps are valuable for enforcing network access controls, defining security zones, and detecting lateral movement during a breach.
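Topology mapping can be pictured as building a graph from neighbor relationships and then querying it. This minimal sketch assumes the discovery step has already produced device-to-device links (for example, from switch neighbor tables); it finds the hop-by-hop path between two devices and lists devices seen on the network but missing from the inventory, which are candidates for rogue hardware. All device names are made up.

```python
from collections import deque


def build_topology(links):
    """Undirected adjacency map built from (device_a, device_b) neighbor pairs."""
    graph = {}
    for a, b in links:
        graph.setdefault(a, set()).add(b)
        graph.setdefault(b, set()).add(a)
    return graph


def find_path(graph, start, goal):
    """Breadth-first search: shortest hop path between two devices, or None."""
    previous = {start: None}
    queue = deque([start])
    while queue:
        node = queue.popleft()
        if node == goal:
            path = []
            while node is not None:  # walk back to the start
                path.append(node)
                node = previous[node]
            return path[::-1]
        for neighbor in sorted(graph.get(node, ())):
            if neighbor not in previous:
                previous[neighbor] = node
                queue.append(neighbor)
    return None


def unknown_devices(graph, inventory):
    """Devices present in the topology but absent from the asset inventory."""
    return sorted(set(graph) - set(inventory))


links = [("fw-1", "core-sw"), ("core-sw", "access-sw-2"),
         ("access-sw-2", "laptop-42"), ("access-sw-2", "unknown-ap")]
topology = build_topology(links)
known = {"fw-1", "core-sw", "access-sw-2", "laptop-42"}

print(find_path(topology, "fw-1", "laptop-42"))
print(unknown_devices(topology, known))
```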

4. Configuration and Infrastructure Visibility

Configuration and infrastructure visibility involves gathering detailed information about the current state of infrastructure components, such as device settings, firmware versions, system parameters, and service configurations. This function of IT discovery ensures that organizations have a documented baseline of how critical systems are configured at any given time.

Maintaining visibility into these configurations is vital for compliance audits, troubleshooting, and change management. It also supports detecting configuration drift, where systems deviate from known, secure settings, potentially introducing vulnerabilities and operational risks.
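Drift detection boils down to comparing the current configuration against a recorded baseline. A minimal sketch, assuming both are available as flat key/value dictionaries (real devices often need their configuration parsed first); the setting names are hypothetical:

```python
def detect_drift(baseline, current):
    """Compare a device's current settings with its approved baseline.

    Returns {setting: (expected, actual)} for every setting that differs,
    including settings missing on either side (reported as None).
    """
    drift = {}
    for key in sorted(set(baseline) | set(current)):
        expected, actual = baseline.get(key), current.get(key)
        if expected != actual:
            drift[key] = (expected, actual)
    return drift


baseline = {"ssh_root_login": "no", "ntp_server": "10.0.0.5", "firmware": "7.2.4"}
current = {"ssh_root_login": "yes", "ntp_server": "10.0.0.5", "firmware": "7.2.4",
           "telnet": "enabled"}

for setting, (expected, actual) in detect_drift(baseline, current).items():
    print(f"{setting}: expected {expected!r}, found {actual!r}")
```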

5. Dependency and Service Relationship Mapping

Dependency and service relationship mapping reveals how applications, services, and infrastructure components interconnect and depend on one another. IT discovery tools analyze communication patterns, service calls, and data flows to model these dependencies, giving IT teams a clear view into the intricate web of relationships in a modern IT environment.

Understanding these dependencies is crucial for change management, incident response, and impact analysis. For example, if a server fails, service relationship maps help identify all affected downstream services and applications.
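Impact analysis of this kind is a graph walk. The sketch below assumes discovery has produced a map of which components each component depends on; it inverts that map and walks outward from a failed component to find everything directly or indirectly affected. Component names are illustrative.

```python
from collections import deque


def downstream_impact(depends_on, failed):
    """Return every component that directly or transitively depends on `failed`.

    depends_on maps each component to the components it calls or runs on.
    """
    # Invert the edges so we can walk from a failed component to its dependents.
    dependents = {}
    for component, requirements in depends_on.items():
        for requirement in requirements:
            dependents.setdefault(requirement, set()).add(component)

    affected, queue = set(), deque([failed])
    while queue:
        node = queue.popleft()
        for dependent in dependents.get(node, ()):
            if dependent not in affected:
                affected.add(dependent)
                queue.append(dependent)
    return sorted(affected)


depends_on = {
    "checkout-web": ["orders-api"],
    "orders-api": ["db-server-1", "payments-api"],
    "payments-api": ["db-server-2"],
    "reporting": ["db-server-1"],
}
print(downstream_impact(depends_on, "db-server-1"))
```

For the sample data, a failure of `db-server-1` affects `orders-api` and `reporting` directly and `checkout-web` through `orders-api`, while `payments-api` is untouched.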

6. Data and Storage Discovery

Data and storage discovery focuses on identifying where data resides across the organization’s infrastructure, including on-premises storage arrays, cloud buckets, databases, and endpoint devices. Discovery tools map data assets, storage capacity, utilization patterns, and access permissions to uncover hidden data silos or potential compliance issues.

By maintaining an up-to-date perspective on data locations and storage configurations, organizations can manage risks related to data loss, leakage, and non-compliance with regulations such as GDPR or HIPAA. Data discovery also aids in capacity planning, helps optimize storage resources, and supports data retention strategies.
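To show how discovered storage metadata can drive risk and capacity checks, here is a small sketch. It assumes discovery has already recorded, for each location, its capacity, usage, whether it is publicly accessible, and which data categories a content scan found; the `SENSITIVE` categories and the 85% threshold are placeholders, not regulatory definitions.

```python
from dataclasses import dataclass


@dataclass
class StorageLocation:
    """Discovered storage metadata (illustrative fields)."""
    name: str
    capacity_gb: float
    used_gb: float
    publicly_accessible: bool
    data_classes: set  # categories reported by a content scan, e.g. {"pii"}


SENSITIVE = {"pii", "health", "payment"}  # hypothetical policy categories


def review_storage(locations, high_usage=0.85):
    """Flag sensitive data exposure and capacity pressure."""
    findings = []
    for loc in locations:
        if loc.publicly_accessible and loc.data_classes & SENSITIVE:
            findings.append((loc.name, "sensitive data publicly accessible"))
        if loc.capacity_gb and loc.used_gb / loc.capacity_gb >= high_usage:
            findings.append((loc.name, "above capacity threshold"))
    return findings


locations = [
    StorageLocation("marketing-assets", 500, 120, True, {"public"}),
    StorageLocation("patient-exports", 200, 40, True, {"health"}),
    StorageLocation("nas-finance", 1000, 930, False, {"payment"}),
]
for finding in review_storage(locations):
    print(finding)
```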

IT Discovery vs. Related Concepts

IT Discovery vs. Monitoring

While IT discovery focuses on identifying and cataloging the components within an IT environment, monitoring is concerned with tracking the performance, health, and activity of those components in real time. IT discovery establishes an inventory of assets and their relationships (what exists and how it is connected), whereas monitoring continuously collects data to measure system uptime, response times, and resource utilization.

Monitoring helps detect issues as they occur, providing insights into operational performance, while IT discovery ensures that the underlying infrastructure and services are correctly identified, classified, and mapped. The two processes complement each other—discovery provides the initial map, and monitoring ensures that any changes or issues with those components are promptly detected and addressed.

IT Discovery vs. Service Mapping

Service mapping builds on the information gathered during IT discovery by focusing on how IT services are delivered to end users. While IT discovery identifies the infrastructure components, including hardware and software, service mapping takes this data and models the relationships between these components as they support business services.

IT discovery provides the foundational knowledge of what exists in the IT environment, while service mapping provides a dynamic, detailed map of service dependencies, helping IT teams understand the end-to-end delivery of services and troubleshoot incidents based on service impacts.

IT Discovery vs. Asset Management

Asset management and IT discovery share the common goal of tracking and managing IT resources, but they differ in scope and application. IT discovery is a process that identifies and categorizes all components of an IT environment, providing a real-time view of what exists within it. Asset management is more focused on the lifecycle and governance of assets, including procurement, maintenance, and disposal.

While asset management tools may rely on data collected from discovery, they go beyond simple identification by integrating with financial and operational systems to track asset depreciation, ownership, and status.

Lanir specializes in founding new tech companies for enterprise software: assembling and nurturing a great team, and taking a company from early-stage funding to late-stage growth, from one design partner to hundreds of enterprise customers, from MVP to enterprise-grade product, from low-level kernel engineering to AI/ML and big data, and from one advisory board to a long list of shareholders and board members from the world's largest VCs.

Tips from the Expert

In my experience, here are tips that can help you get more value and accuracy from IT discovery initiatives:

- Start with a network topology baseline scan: Perform an initial topology discovery to map out all known and unknown devices. This creates a visual reference for detecting rogue devices or misconfigured assets later.

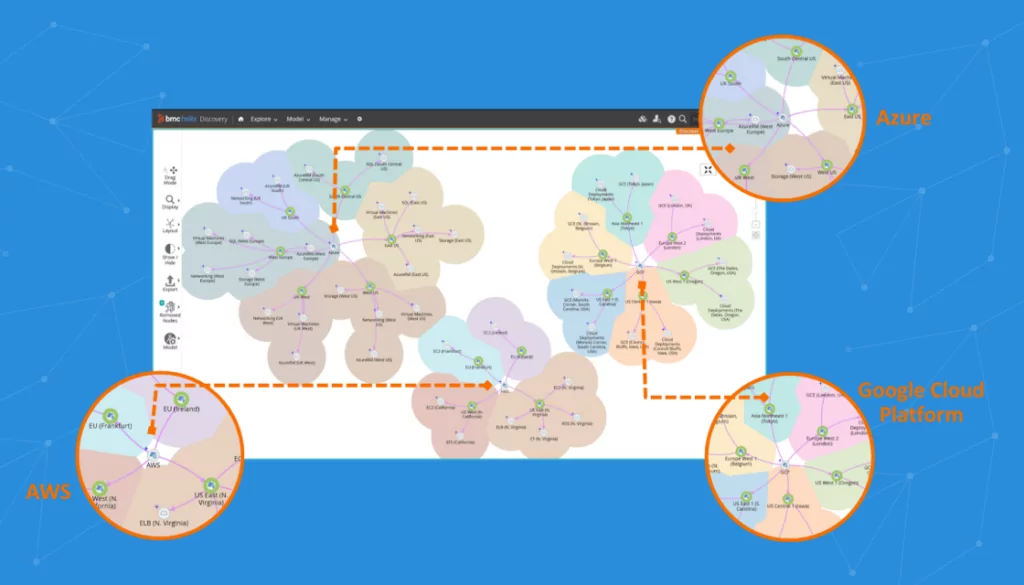

- Leverage API-based discovery for cloud-native assets: Traditional network scanning often misses ephemeral cloud resources. Use API integrations with AWS, Azure, and GCP to capture dynamic workloads, serverless functions, and containers.

- Combine discovery with vulnerability scanning: Enrich the asset inventory by correlating discovered configurations with known vulnerabilities to prioritize patching and remediation efforts.

- Deploy passive discovery sensors in sensitive networks: For OT, IoT, and air-gapped environments, passive sniffers avoid disruptions while still detecting unauthorized devices or traffic anomalies.

- Enable agent-based discovery for deep OS insights: Where possible, use lightweight agents on critical servers to capture granular configuration data, running services, and patch levels that agentless methods often miss.
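To make the API-based tip concrete for one provider, the sketch below lists EC2 instances through AWS's `DescribeInstances` API using a boto3 EC2 client, following pagination so large accounts are fully covered. It is a minimal, AWS-only example: Azure and GCP need their own SDKs, and serverless functions or containers are exposed through other APIs (for example, Lambda or ECS/EKS calls), which are not shown. Passing the client in, rather than creating it inside the function, is a design assumption that keeps the code testable without credentials.

```python
def discover_ec2_instances(ec2_client):
    """List EC2 instances via DescribeInstances, following pagination.

    ec2_client is expected to be a boto3 EC2 client, for example
    boto3.client("ec2", region_name="us-east-1").
    """
    assets = []
    paginator = ec2_client.get_paginator("describe_instances")
    for page in paginator.paginate():
        for reservation in page.get("Reservations", []):
            for instance in reservation.get("Instances", []):
                # Tags arrive as a list of {"Key": ..., "Value": ...} pairs.
                tags = {t["Key"]: t["Value"] for t in instance.get("Tags", [])}
                assets.append({
                    "id": instance["InstanceId"],
                    "type": instance.get("InstanceType"),
                    "state": instance.get("State", {}).get("Name"),
                    "name": tags.get("Name"),
                })
    return assets
```

In practice you would call it with a real client, e.g. `discover_ec2_instances(boto3.client("ec2", region_name="us-east-1"))`, once per region you operate in, since EC2 API calls are regional.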

Challenges in IT Discovery

Here are some of the main factors that can make it more challenging to identify IT assets.

Complex Environments

Modern IT environments are often highly heterogeneous, spanning on-premises infrastructure, multiple public and private clouds, virtualized resources, and increasingly, edge devices and IoT. This complexity makes IT discovery challenging, as conventional scanning techniques may not reach across all network segments, protocols, and platforms. Hybrid environments require tools capable of integrating data from cloud APIs, virtualization layers, containers, and legacy systems.

Data Overload

The scale and dynamic nature of enterprise environments mean that IT discovery processes may generate massive volumes of data. If not managed, this data overload can overwhelm IT teams, leading to missed insights or delayed responses. Discovery tools must be equipped with filtering, correlation, and prioritization capabilities, allowing teams to focus on relevant changes, risks, or anomalies without being buried under unnecessary detail.
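Correlation helps with overload because several discovery sources often report the same device. The sketch below merges per-source records into one entry per device, assuming the MAC address is a reliable shared key (in practice, serial numbers, cloud instance IDs, or combinations of attributes may be needed). Source names and fields are hypothetical.

```python
def normalize_mac(mac):
    """'AA-BB-CC-00-11-22' and 'aa:bb:cc:00:11:22' become the same key."""
    return mac.replace("-", ":").lower()


def correlate(records):
    """Merge per-source discovery records that describe the same device.

    Each record is a dict with 'source' and 'mac' keys plus whatever
    attributes that source reported. The first non-None value seen for an
    attribute is kept; later sources only fill in attributes still missing.
    """
    merged = {}
    for record in records:
        key = normalize_mac(record["mac"])
        entry = merged.setdefault(key, {"sources": []})
        entry["sources"].append(record["source"])
        for attr, value in record.items():
            if attr not in ("mac", "source") and value is not None:
                entry.setdefault(attr, value)
    return merged


records = [
    {"source": "network-scan", "mac": "AA-BB-CC-00-11-22", "ip": "10.0.0.8", "os": None},
    {"source": "agent", "mac": "aa:bb:cc:00:11:22", "hostname": "db-01", "os": "Ubuntu 22.04"},
    {"source": "dhcp", "mac": "aa:bb:cc:00:99:01", "ip": "10.0.0.51"},
]
assets = correlate(records)
print(len(assets))  # 2 devices from 3 records
```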

Security Concerns

Running IT discovery can introduce security risks if performed without sufficient safeguards. Poorly configured scans may introduce new weaknesses (for example, overprivileged or poorly stored scanning credentials), inadvertently expose sensitive data, or consume excessive network resources, potentially impacting production services. Discovery tools themselves can become targets if they hold privileged access or are not properly secured.

Types of IT Discovery Tools and Techniques

Agent-Based vs. Agentless Discovery

Agent-based discovery involves installing lightweight agents on each device to collect detailed information directly from the source. This approach provides access to low-level system data and granular change detection, and it can function reliably in segmented or isolated network environments. However, deploying and maintaining agents adds operational overhead, may require frequent updates, and can introduce compatibility challenges.

Agentless discovery leverages protocols such as SNMP, WMI, or APIs to remotely scan and inventory systems without deploying software to each endpoint. This method simplifies deployment and reduces maintenance, but might have limited visibility into certain system attributes or experience challenges with firewalls or credential management. Organizations often blend agent-based and agentless techniques to maximize coverage.
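The agent-based side can be sketched with the standard library alone: the function below gathers a few host facts of the kind a lightweight agent might report to a central collector. It is deliberately minimal (no package, service, or patch inventory), and the JSON field names are assumptions. Agentless collection over SNMP or WMI depends on protocol-specific libraries and is not shown.

```python
import json
import os
import platform
import socket
from datetime import datetime, timezone


def collect_host_facts():
    """Gather basic host facts using only standard-library calls."""
    return {
        "hostname": socket.gethostname(),
        "os": platform.system(),
        "os_release": platform.release(),
        "architecture": platform.machine(),
        "cpu_count": os.cpu_count(),
        "collected_at": datetime.now(timezone.utc).isoformat(),
    }


if __name__ == "__main__":
    # A real agent would send this to a collector; here it is just printed.
    print(json.dumps(collect_host_facts(), indent=2))
```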

Passive vs. Active Scanning Techniques

Passive scanning observes network traffic in real time to detect devices, software, and services without generating additional load or risk of disruption. This approach is valuable for identifying rogue assets or changes as they occur, but it may miss inactive or low-traffic components. Passive techniques are less intrusive, making them well suited to sensitive or high-availability environments.

Active scanning involves systematically probing the network or endpoints to solicit responses and gather comprehensive information about the environment. While this provides higher accuracy and completeness, active scanning can consume bandwidth, trigger security alarms, or even impact systems if performed too aggressively. Organizations often use a combination of both methods—passive for always-on, real-time surveillance and active for scheduled, in-depth analysis.
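Active scanning in its simplest form is a TCP connect probe: try to open a connection to each port and record which ones accept. The sketch below adds a timeout and a pause between probes, a crude way to keep the scan from being too aggressive; the parameter values are arbitrary defaults, and it should only be pointed at systems you are authorized to scan.

```python
import socket
import time


def probe_ports(host, ports, timeout=0.5, delay=0.1):
    """Active TCP connect probe: return the ports that accept a connection.

    `delay` spaces out probes to limit load on the network and the target.
    """
    open_ports = []
    for port in ports:
        try:
            with socket.create_connection((host, port), timeout=timeout):
                open_ports.append(port)
        except OSError:
            pass  # refused, timed out, or unreachable
        time.sleep(delay)
    return open_ports


if __name__ == "__main__":
    # Results depend entirely on what is listening on the scanned host.
    print(probe_ports("127.0.0.1", [22, 80, 443]))
```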

Real-Time vs. Scheduled Discovery

Real-time discovery maintains a continual watch over the environment, immediately detecting and recording new assets or changes to existing ones. This continuous approach is vital for fast-moving, dynamic networks where assets are frequently provisioned or decommissioned. Real-time discovery supports rapid response, compliance, and operational agility but may require more resources and sophisticated data processing.

Scheduled discovery operates at defined intervals—hourly, daily, or weekly—batching scans to reduce impact on network and system performance. While it provides a regular inventory snapshot, there may be a lag between changes and their detection, which can be a drawback in highly dynamic environments. Pairing scheduled scans with real-time techniques offers both efficiency and timeliness, allowing organizations to tailor strategies to their needs.
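Scheduled discovery usually means comparing consecutive snapshots. This sketch assumes each scan produces a mapping from asset ID to its attributes and reports what was added, removed, or changed between two runs. It also illustrates the lag described above: an asset that is created and deleted between two scheduled runs never appears in either snapshot.

```python
def diff_snapshots(previous, current):
    """Compare two discovery snapshots keyed by asset ID.

    Each snapshot maps asset_id -> attribute dict.
    """
    added = sorted(current.keys() - previous.keys())
    removed = sorted(previous.keys() - current.keys())
    changed = sorted(
        asset_id for asset_id in current.keys() & previous.keys()
        if current[asset_id] != previous[asset_id]
    )
    return {"added": added, "removed": removed, "changed": changed}


monday = {"vm-1": {"ip": "10.0.0.4"}, "vm-2": {"ip": "10.0.0.5"}}
tuesday = {"vm-1": {"ip": "10.0.0.9"}, "vm-3": {"ip": "10.0.0.6"}}
print(diff_snapshots(monday, tuesday))
```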

Best Practices for Effective IT Discovery

Here are some of the ways that organizations can ensure a reliable discovery strategy for their IT assets.

1. Maintain an Always-On Discovery Process

Implementing an always-on discovery process keeps the asset inventory continuously up to date, minimizing the risk of unmanaged devices or blind spots. This matters because modern environments are increasingly dynamic, with endpoints, instances, and services frequently spinning up or down.

Continuous discovery provides organizations with timely intelligence, strengthens operational control, and enables proactive detection of unauthorized assets. Always-on discovery should leverage automation, alerting, and integration with other IT management systems for maximum effectiveness.

Organizations benefit from real-time visibility, faster incident response, and improved compliance posture. However, it is crucial to design these processes with scalability and resource management in mind to avoid overwhelming networks or analysis capabilities.

2. Classify and Tag Assets Consistently

Consistent classification and tagging of assets enable efficient filtering, reporting, and policy enforcement across the IT environment. Without standardized asset metadata, it becomes difficult to prioritize, secure, or manage components effectively. Classification schemes should include device type, business function, environment (e.g., production, development), ownership, and compliance requirements.

Automated discovery tools can assist by applying tags based on predefined criteria and templates, but periodic manual review is necessary to correct errors and address exceptions. Consistent asset classification lays the groundwork for effective change management, risk assessment, and alignment with business priorities.
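Rule-based tagging can be as simple as matching naming conventions. The sketch below assumes hostnames follow a hypothetical `prd-`/`dev-` prefix and `-db`/`-web` suffix convention; every matching rule contributes tags, and hosts that match no environment rule are marked for manual review.

```python
import re

# Hypothetical naming-convention rules: (pattern searched in the hostname, tags).
TAG_RULES = [
    (re.compile(r"^prd-"), {"environment": "production"}),
    (re.compile(r"^dev-"), {"environment": "development"}),
    (re.compile(r"-db\d*$"), {"function": "database"}),
    (re.compile(r"-web\d*$"), {"function": "web"}),
]


def auto_tag(hostname):
    """Apply every matching rule; flag hosts with no environment for review."""
    tags = {}
    for pattern, rule_tags in TAG_RULES:
        if pattern.search(hostname.lower()):
            tags.update(rule_tags)
    if "environment" not in tags:
        tags["needs_review"] = True
    return tags


for host in ["prd-orders-db01", "dev-portal-web2", "lab-printer"]:
    print(host, auto_tag(host))
```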

3. Integrate Discovery with Incident and Change Management

Integrating IT discovery with incident and change management processes simplifies root cause analysis and reduces mean time to resolution (MTTR). When discovery feeds real-time asset status and change data into incident response workflows, IT teams gain the context needed to quickly identify affected services and prioritize remediation.

Integration also ensures that configuration changes are tracked and aligned with policy. This synergy supports proactive risk reduction by highlighting unauthorized or unexpected changes as soon as they occur. Automation can create incident tickets and change requests directly from discovery events, eliminating manual steps.
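A minimal sketch of that routing logic, assuming each discovered change can be matched against approved change requests by ID. The `ChangeEvent` fields, the `CHG-` identifiers, and the returned ticket dictionaries are hypothetical stand-ins for whatever an ITSM system actually expects.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class ChangeEvent:
    """A configuration change detected by discovery (fields are illustrative)."""
    asset_id: str
    setting: str
    old: str
    new: str
    change_request: Optional[str] = None  # approved change ID, if one was cited


def route_change(event, approved_changes):
    """Link an authorized change to its request, or open an incident.

    approved_changes maps a change-request ID to the set of asset IDs it covers.
    """
    covered = approved_changes.get(event.change_request, set())
    if event.asset_id in covered:
        return {"action": "link_to_change", "change_request": event.change_request,
                "asset": event.asset_id}
    return {
        "action": "open_incident",
        "asset": event.asset_id,
        "summary": (f"Unapproved change to {event.setting} on {event.asset_id}: "
                    f"{event.old!r} -> {event.new!r}"),
    }


approved = {"CHG-1001": {"fw-1"}}
print(route_change(ChangeEvent("fw-1", "rule_count", "40", "41", "CHG-1001"), approved))
print(route_change(ChangeEvent("sw-3", "vlan", "10", "99"), approved))
```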

4. Regularly Audit Discovery Coverage and Accuracy

Regular audits of discovery coverage and data accuracy are essential to maintain trust in asset inventories. As environments evolve, devices are added or removed, and cloud instances change, it is easy for inventories to drift from reality.

Scheduled audits—comparing discovery output to actual infrastructure—allow organizations to detect gaps, reconcile discrepancies, and rectify errors promptly. Auditing should include validation of asset classification, configuration details, and detection of orphaned or ghost records. Leveraging reporting and analytics capabilities of discovery tools can simplify this process, highlighting anomalies for manual review.
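Coverage audits come down to set comparisons between discovery output and an independent source. The sketch assumes both are lists of asset IDs; anything in the reference but not discovered is a coverage gap, and anything discovered but absent from the reference is flagged as a possible ghost record rather than deleted, since the reference list may be incomplete too.

```python
def audit_coverage(discovered, reference):
    """Compare discovery output with an independent reference list.

    discovered: asset IDs in the discovery inventory
    reference:  asset IDs from another source of truth (for example, a
                DHCP lease export or a procurement list)
    """
    discovered, reference = set(discovered), set(reference)
    missed = sorted(reference - discovered)   # gaps in discovery coverage
    ghosts = sorted(discovered - reference)   # candidates for manual review
    coverage = len(reference & discovered) / len(reference) if reference else 1.0
    return {"coverage": coverage, "missed": missed, "ghost_candidates": ghosts}


report = audit_coverage(
    discovered=["srv-01", "srv-02", "old-vm-17"],
    reference=["srv-01", "srv-02", "srv-03", "ws-100"],
)
print(f"coverage {report['coverage']:.0%}, missed {report['missed']}")
```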

5. Align Discovery Objectives with Business Outcomes

IT discovery initiatives should be guided by clear business objectives, not simply technical completeness. Aligning discovery with organizational goals—such as regulatory compliance, cyber resilience, cost optimization, or digital transformation—ensures that discovery efforts deliver measurable value.

Collaborating with stakeholders establishes priorities, success criteria, and the scope of discovery campaigns. Objective alignment ensures that critical assets and processes receive appropriate discovery focus, while less relevant components do not consume undue resources.

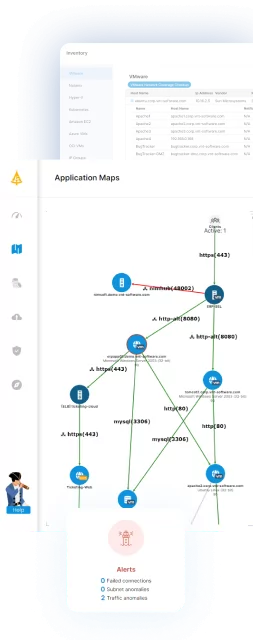

Real Time IT Discovery with Faddom

For IT teams aiming to maintain an accurate view of complex, hybrid environments, Faddom offers a discovery platform tailored to address these challenges. It continuously maps all assets, configurations, and dependencies across on-premises, cloud, and hybrid infrastructure, providing the context necessary for precise inventory management, smarter change management, and quicker incident response.

Faddom automatically classifies assets, tracks configuration changes, and visualizes application relationships without any manual effort. It supports continuous discovery, integrates seamlessly with IT Service Management (ITSM) processes, and strengthens your IT documentation by identifying shadow IT, storage sprawl, and undocumented systems that are often overlooked.

If your objective is to enhance visibility, reduce operational risk, and ensure that your IT inventory accurately reflects the current state in real time, Faddom is the platform designed for you.

Schedule a demo to learn how Faddom can help you transform discovery into a strategic advantage!