What is an Application Assessment?

Application assessments offer a complete picture of software, from creation right through to implementation and deployment within the production environment. A comprehensive review starts with assessing how well an application is constructed. An application’s components—including third-party libraries, modules, dependencies, and configuration—all significantly impact its overall quality.

Poorly constructed applications can suffer from degraded performance and stability issues. They may also introduce security risks through vulnerabilities or misconfigurations. Design inefficiencies can drive up maintenance costs when manual processes or periodic code fixes are needed to keep the application running. In the worst cases, a poorly designed application that provides services across the organization may cause outages in the systems that rely on it, reducing their uptime and performance.

When discussing application assessments, one might assume that only the code needs to be reviewed. However, a complete application assessment analyzes a combination of the code itself, how the code is implemented, how it is deployed, and the way in which it is integrated into its environment. Comprehensive assessments must also consider the hardware infrastructure that supports the software. Only when all this information is considered as a whole can an application be evaluated holistically.

Main Use Cases for Application Assessment

Application assessments are essential for ensuring the quality, security, and performance of software applications. Here are some main use cases for conducting application assessments:

- Quality assurance: Application assessments help ensure that the software meets the desired quality standards and works as intended, minimizing defects, errors, and usability issues that could affect the end-user experience.

- Security: Security assessments aim to identify vulnerabilities and potential threats within the application, helping developers to address and fix these issues before they can be exploited by malicious actors. This is particularly important for applications handling sensitive data or those exposed to the public internet.

- Performance optimization: By assessing the performance of an application under various workloads and conditions, developers can identify bottlenecks and optimize resource usage, ensuring that the software can handle the expected user traffic and perform efficiently.

- Compatibility: Application assessments help ensure that the software is compatible with different devices, operating systems, and browsers, providing a consistent user experience across various platforms.

- Regulatory compliance: For applications that must adhere to specific industry regulations or standards, assessments can help ensure that the software is compliant and meets the necessary requirements.

- Risk mitigation: Application assessments can help organizations identify and mitigate potential risks associated with software development, such as project delays, budget overruns, and reputational damage due to poor application quality or security breaches.

- Vendor evaluation: When outsourcing software development or purchasing third-party applications, organizations can use application assessments to evaluate the quality, security, and performance of the vendor’s product, ensuring it meets their requirements and expectations.
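The security and vendor-evaluation use cases often start with an inventory of an application's third-party dependencies. As a minimal sketch, assuming dependencies are pinned to simple numeric versions and that advisory data is available as affected version ranges, a basic check might look like this. The package names, advisory entries, and the `Advisory` and `find_vulnerable` helpers are hypothetical; a real assessment would typically rely on a maintained vulnerability database and a full version parser.

```python
# Minimal sketch: flag pinned dependencies that fall inside known-vulnerable
# version ranges. Package names and advisory data below are hypothetical.
# Assumes purely numeric dotted versions such as "2.4.1".
from dataclasses import dataclass


def parse_version(text: str) -> tuple[int, ...]:
    """Turn a dotted version string such as '2.4.1' into (2, 4, 1)."""
    return tuple(int(part) for part in text.split("."))


@dataclass(frozen=True)
class Advisory:
    package: str
    introduced: str  # first affected version (inclusive)
    fixed: str       # first patched version (exclusive upper bound)
    summary: str


def find_vulnerable(pinned: dict[str, str],
                    advisories: list[Advisory]) -> list[tuple[str, str, str]]:
    """Return (package, version, summary) for every pinned version an advisory covers."""
    hits = []
    for adv in advisories:
        version = pinned.get(adv.package)
        if version is None:
            continue  # the application does not use this package
        v = parse_version(version)
        if parse_version(adv.introduced) <= v < parse_version(adv.fixed):
            hits.append((adv.package, version, adv.summary))
    return hits


if __name__ == "__main__":
    pinned = {"examplelib": "2.4.1", "otherlib": "1.0.0"}
    advisories = [
        Advisory("examplelib", "2.0.0", "2.5.0", "hypothetical injection flaw"),
        Advisory("otherlib", "0.1.0", "0.9.0", "hypothetical auth bypass"),
    ]
    for package, version, summary in find_vulnerable(pinned, advisories):
        print(f"{package} {version}: {summary}")
```

Treating the fixed version as an exclusive bound mirrors how advisories usually describe a patch: the first fixed release is no longer affected.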

How Does an Application Assessment Work?

Application assessment typically involves several aspects, such as:

- Functionality assessment: This aspect involves examining whether the application’s features and functions align with the business requirements and user needs. It checks if the application performs the tasks it was designed for and if it does so efficiently and effectively.

- Usability assessment: Evaluating the application’s user interface, user experience, and overall ease of use. This assessment aims to ensure that the application is intuitive, user-friendly, and accessible to its target audience.

- Security assessment: Analyzing the application for security weaknesses that could lead to data breaches, unauthorized access, or other security risks. This process often includes penetration testing, static and dynamic code analysis, and a review against security best practices and standards.

- Performance assessment: Testing the application’s performance under various conditions, such as high user loads, limited resources, or different network environments. Performance assessment helps identify bottlenecks, latency issues, and other factors that could impact the application’s responsiveness and reliability.

- Compatibility assessment: Ensuring that the application works well across different devices, operating systems, browsers, and platforms. This assessment identifies any compatibility issues that may prevent the application from functioning correctly in different environments.

- Code quality assessment: Reviewing the application’s source code for adherence to best practices, maintainability, and overall quality. This process involves checking for code consistency, proper documentation, and efficient use of resources.
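For the performance assessment, one basic building block is timing an operation many times and reporting percentiles rather than a single average, since slow outliers often matter most to users. The sketch below assumes a synchronous Python callable as the unit under test; `simulated_request`, `measure`, and `percentile` are hypothetical names, and a realistic load test would generate concurrent traffic with a dedicated tool.

```python
# Minimal sketch: time repeated calls to an operation and report latency
# percentiles. `simulated_request` is a hypothetical stand-in for a real call.
import math
import time


def percentile(samples: list[float], pct: float) -> float:
    """Nearest-rank percentile (pct in (0, 100]) of a non-empty sample list."""
    ordered = sorted(samples)
    rank = math.ceil(pct / 100 * len(ordered))
    return ordered[max(rank, 1) - 1]


def measure(operation, iterations: int = 200) -> dict[str, float]:
    """Call `operation` repeatedly and summarize wall-clock latency in milliseconds."""
    latencies = []
    for _ in range(iterations):
        start = time.perf_counter()
        operation()
        latencies.append((time.perf_counter() - start) * 1000)
    return {
        "p50_ms": percentile(latencies, 50),
        "p95_ms": percentile(latencies, 95),
        "max_ms": max(latencies),
    }


def simulated_request() -> None:
    # Hypothetical workload: replace with a call to the application under test.
    sum(i * i for i in range(10_000))


if __name__ == "__main__":
    print(measure(simulated_request))
```

Repeating the same measurement under different conditions (more data, constrained resources, a slower network) is what turns this into the comparison the assessment needs.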

The Application Assessment Process

The application assessment process typically consists of several steps aimed at evaluating an application’s design, functionality, security, performance, and overall quality. While the exact process may vary depending on the organization’s goals and the specific application, the following steps provide a general outline of the application assessment process:

1. Define objectives and scope

Begin by establishing the goals of the assessment and determining which aspects of the application will be evaluated. This may include functionality, usability, security, performance, compatibility, or code quality.

2. Gather information

Collect relevant information about the application, such as its architecture, design documentation, source code, and any existing test results. This information will be used to guide the assessment process and inform the selection of appropriate testing tools and techniques.

3. Select tools and techniques

Choose the appropriate tools and techniques for assessing the application based on its complexity, technology stack, and the specific objectives of the assessment. This may include automated testing tools, static and dynamic code analysis, penetration testing, or manual code reviews.

4. Perform the assessment

Conduct the application assessment using the selected tools and techniques. This may involve testing the application’s functionality, evaluating its user experience, analyzing its source code, or assessing its performance under various conditions.

5. Analyze the results

Review the results of the assessment to identify any issues, vulnerabilities, or areas for improvement. This may include examining test results, code analysis reports, or penetration testing findings.

6. Prioritize and document findings

Prioritize the identified issues based on their severity, potential impact, and the effort required to address them. Document the findings, along with any recommendations for improvement, in a clear and concise manner.

7. Implement remediation

Work with the development team to address the identified issues and implement the recommended improvements. This may involve fixing security vulnerabilities, optimizing performance, or enhancing the application’s usability.

8. Verify and re-assess

Once the recommended improvements have been implemented, verify that the issues have been resolved and, if necessary, re-assess the application to ensure that no new issues have been introduced.

9. Continuous improvement

Continuously monitor the application for new issues or changes in the threat landscape, and update the assessment process as needed. Implement a feedback loop to apply lessons learned from the assessment to future projects and improve the overall application development process.
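Step 6 calls for prioritizing findings by severity, potential impact, and remediation effort. One simple way to make that ordering explicit is a score that rises with severity and impact and falls with effort. The 1 to 5 scales, the `severity * impact / effort` formula, and the example findings below are assumptions for illustration only; security findings are often rated with an established scheme such as CVSS instead.

```python
# Minimal sketch: rank findings by severity and impact relative to effort.
# The 1-5 scales and the scoring formula are assumptions, not a standard.
from dataclasses import dataclass


@dataclass
class Finding:
    title: str
    severity: int  # 1 (low) .. 5 (critical)
    impact: int    # 1 (isolated) .. 5 (organization-wide)
    effort: int    # 1 (trivial fix) .. 5 (major rework)

    @property
    def priority(self) -> float:
        # Higher severity and impact raise priority; higher effort lowers it.
        return self.severity * self.impact / self.effort


def prioritize(findings: list[Finding]) -> list[Finding]:
    """Return findings ordered from highest to lowest priority score."""
    return sorted(findings, key=lambda f: f.priority, reverse=True)


if __name__ == "__main__":
    findings = [
        Finding("Outdated TLS configuration", severity=4, impact=4, effort=1),
        Finding("Missing API documentation", severity=1, impact=2, effort=2),
        Finding("Slow report generation", severity=3, impact=3, effort=4),
    ]
    for f in prioritize(findings):
        print(f"{f.priority:5.2f}  {f.title}")
```

Dividing by effort favors quick wins; a team that wants critical issues first regardless of cost could sort by severity and use the score only as a tiebreaker.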

Best Practices for Application Assessment

Defining Scope and Frequency

An application assessment may be a one-time event with a fixed scope, for example when planning a cloud migration. The outcome of this type of assessment is a recommendation on the type of cloud transformation that should take place, or a decision to keep the application on premises.

When assessing an application in production, assessments may take place several times a year. A broader-scope assessment may be conducted once annually, while the follow-up tests that come after the first assessment are more tightly focused on the areas of concern it discovered. Retesting may focus on determining the current effectiveness of, or the progress made in, those areas of initial concern.
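The cadence described above, one broad assessment per year followed by narrower retests of the initial areas of concern, can be expressed as a simple plan. This sketch assumes evenly spaced follow-ups and mid-month dates; the `plan_year` helper, the area names, and the three-follow-up default are hypothetical choices rather than a recommended schedule.

```python
# Minimal sketch: one broad annual assessment plus focused follow-up retests.
# The mid-month dates, even spacing, and area names are hypothetical choices.
from datetime import date


def plan_year(year: int, full_scope: list[str], open_concerns: list[str],
              follow_ups: int = 3) -> list[tuple[date, list[str]]]:
    """Return (date, scope) pairs: a broad review in January, then focused retests."""
    if not 0 <= follow_ups <= 11:
        raise ValueError("follow_ups must be between 0 and 11")
    plan = [(date(year, 1, 15), list(full_scope))]
    # Space the follow-ups evenly across the rest of the year (whole months).
    step = 12 // (follow_ups + 1)
    for i in range(1, follow_ups + 1):
        # Retests cover only the areas of concern raised by the broad review.
        plan.append((date(year, 1 + i * step, 15), list(open_concerns)))
    return plan


if __name__ == "__main__":
    scope = ["functionality", "security", "performance", "code quality"]
    concerns = ["security", "performance"]
    for when, areas in plan_year(2025, scope, concerns):
        print(when.isoformat(), "->", ", ".join(areas))
```

In practice the list of open concerns would shrink between retests as areas are resolved, so each follow-up could be planned from the latest findings rather than fixed up front.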

Identifying Key Stakeholders

Assessment of software cannot be conducted in a bubble. Like software development, it requires cross-team collaboration and information sharing in order to understand the scope and nuances of a project. Key stakeholders may include application owners, users, operational teams, and project champions and managers.

Application Owners

Application owners have intimate knowledge of the application, and may include programmers, architects, and other technical contributors.

Users

This group includes representatives of the application's users who may be affected by testing. Users can provide information on common workflows and high or critical usage times, which is essential for reducing the impact of testing on the workforce.

Operational Teams

This group consists of the cloud and on-premises teams that support the application's operations. They can help identify possible impacts of testing on performance or operations, and provide important information about design versus implementation. The implementation sometimes differs from the design because technical, labor, or time constraints forced workarounds that are not reflected in the design.

Project Champion and Project Manager

The project champion is a vital stakeholder, since they act as the project sponsor for the organization. The project champion must be someone with the capacity to rally resources to the assessment, and the clout to drive the project as a whole. Without their support, the application assessment can run into bottlenecks or resource blockers that prevent its timely completion or stop it dead in its tracks. Since this role is complex and multifaceted, the champion is usually backed up by a project manager who helps to establish scope, objectives, and priorities. The project manager helps to coordinate resource management, ensuring that the right teams and individuals are available for the project when needed, contributing to timely completion of the project.

Managing Expectations

From the outset, it is crucial to communicate to the individuals who create or maintain the application that the assessment will likely uncover areas requiring remediation. These discoveries are not a judgment of the quality of the team, code, or implementation, but a neutral evaluation. Teams can find evaluations of their work emotionally challenging, especially if grading is part of the process. In these cases, it helps to reinforce that the process does not assign blame, but rather determines whether any areas require optimization. This is an important management step for reducing internal resistance to the assessment process.

It is also crucial to prepare critical stakeholders for action items stemming from the assessment, since it is rare for an assessment to find nothing that needs improvement. Individual findings will need to be evaluated to determine whether an appropriate business case exists for resolving them. Those with a reasonable business case will be prioritized, and timelines created to set goals and expectations for their resolution.

Application Assessment Deliverables

At the end of an engagement, it is essential to translate the collective findings into shareable deliverables. Deliverables that could be created based on the data discovered include a findings meeting, assessment report, action plan, and dashboard.

Findings Meeting

In these meetings, one of the assessors will walk key stakeholders through the assessment results, explaining findings and recommendations. Attendees will discuss the methodologies and outcomes of the review, and develop a next-steps plan with operational and implementation teams to determine and prioritize the next stage(s) of the process.

Assessment Report

The assessment report contains summarized information, highlighting key findings and recommendations.

Action Plan

Any critical findings from the application assessment will be included in the plan, along with recommendations for remediation.

Dashboard

The dashboard contains in-depth data gathered from testing. As it may include sensitive information, access should be limited to internal users who require a deeper understanding of the data. Information of this nature is helpful to engineers and technicians looking to discover root causes when troubleshooting or remediating findings.

Empowering IT Assessments with Faddom

Conducting an IT application assessment requires support across the organization, and expertise in all areas from application development to operation. Sometimes, in the course of assessment, documentation may be found to be outdated or inaccurate, which can slow or halt the process, or create incorrect findings if not noticed and remediated.

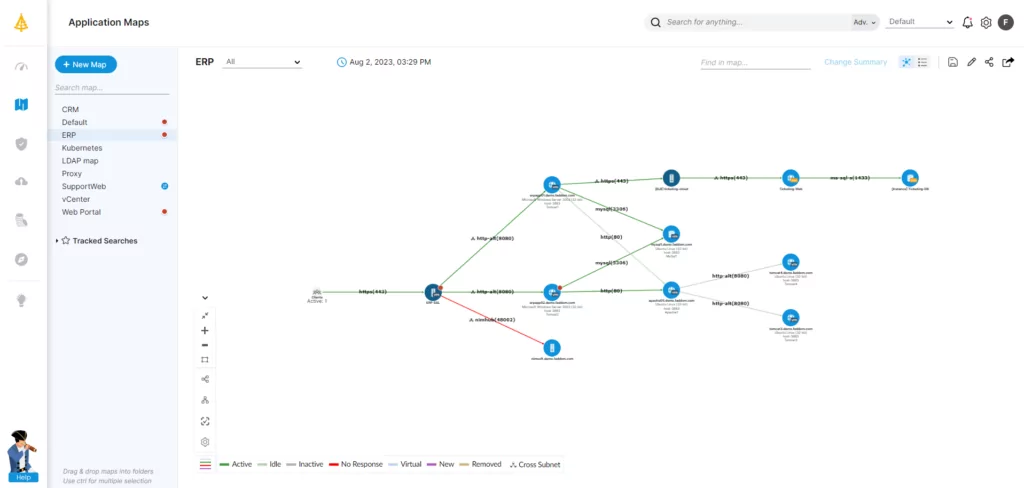

Faddom’s application dependency mapping helps businesses to overcome this challenge by rapidly gathering information about underlying application dependencies. From mapping out the hardware infrastructure to identifying required libraries and custom codebases, Faddom produces and validates the information required to ensure that any IT assessment begins with full and accurate information.
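To illustrate what a dependency map makes possible during an assessment, the sketch below turns a list of observed client-to-server connections into a graph and finds every component that would be affected if one part of the application failed, the kind of cross-system outage described earlier. This is a generic breadth-first search over hypothetical connection records, not Faddom's implementation; the host names and the `build_dependents` and `affected_by` helpers are assumptions.

```python
# Minimal sketch: build a dependency map from observed connections and find
# everything that would be affected if one component fails.
# The connection records and host names are hypothetical examples.
from collections import defaultdict, deque


def build_dependents(connections: list[tuple[str, str]]) -> dict[str, set[str]]:
    """Map each server to the clients that connect to it (its dependents)."""
    dependents: dict[str, set[str]] = defaultdict(set)
    for client, server in connections:
        dependents[server].add(client)
    return dependents


def affected_by(component: str, dependents: dict[str, set[str]]) -> set[str]:
    """Breadth-first search for every component that transitively depends on `component`."""
    seen: set[str] = set()
    queue = deque([component])
    while queue:
        current = queue.popleft()
        for client in dependents.get(current, ()):
            # Skip already-visited nodes and the starting component (handles cycles).
            if client not in seen and client != component:
                seen.add(client)
                queue.append(client)
    return seen


if __name__ == "__main__":
    observed = [
        ("web-frontend", "orders-api"),
        ("orders-api", "orders-db"),
        ("billing-service", "orders-api"),
        ("reporting-job", "billing-service"),
    ]
    graph = build_dependents(observed)
    print(sorted(affected_by("orders-db", graph)))
```

Storing edges in the "who depends on me" direction makes the impact question a single traversal; the reverse direction would answer which components an application needs in order to run.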

Try a free trial of Faddom today and start mapping your application environment in less than an hour.