What Is Data Center Capacity Planning?

Data center capacity planning is the process of evaluating and strategically preparing for a data center’s current and future needs in areas like space, power, cooling, and computing resources to meet workload demands. This involves assessing existing capacity, predicting future growth, and implementing a plan to ensure the IT infrastructure can handle both immediate and long-term requirements without causing performance issues or downtime.

The process requires data collection, analysis, and scenario modeling to identify trends and anticipate future demands. It must consider external factors like regulatory requirements, business expansion, or shifts toward cloud architectures, all of which can impact baseline infrastructure needs.

Key dimensions of data center capacity planning include:

- Space: Planning for physical space for servers, racks, and rows.

- Power: Calculating and planning for electrical capacity to power all equipment.

- Cooling: Ensuring there is enough cooling capacity to prevent overheating.

- Computing resources: Assessing and planning for processing power, memory, and storage.

- Network connectivity: Ensuring sufficient bandwidth and port connections for network traffic.

Why Is Data Center Capacity Planning Important?

Effective capacity planning is critical for maintaining service quality, avoiding costly downtime, and aligning IT infrastructure with business growth. Without it, organizations risk performance bottlenecks, inefficient resource use, and unplanned capital expenditures.

- Prevents overprovisioning and underprovisioning: Planning helps avoid spending on unnecessary resources while ensuring critical systems have enough capacity to perform reliably.

- Reduces risk of downtime: Proper forecasting minimizes the chance of hitting resource limits, which can cause service interruptions and degrade user experience.

- Improves cost efficiency: By aligning infrastructure investments with actual needs, organizations can better manage both capital and operational expenses.

- Supports scalability: Capacity planning ensures that the data center can scale in line with business demand, whether through expansion, consolidation, or hybrid cloud integration.

- Enables strategic decision-making: Data-driven insights from capacity planning inform purchasing decisions, technology upgrades, and long-term infrastructure strategy.

- Ensures compliance and resilience: Anticipating capacity needs helps meet regulatory requirements and build redundancy into the system for fault tolerance and disaster recovery.

Core Dimensions of Capacity in Modern Data Centers

Physical Space, Rack Density, and Floor Layout Considerations

Physical space sets a hard limit on data center expansion. Floor area, ceiling height, and structural capacity determine how many racks and devices can be accommodated. As rack density increases, more IT gear fits in the same footprint, but higher power and cooling demands challenge traditional layouts.

Rack spacing, cable management, and floor loading are critical factors. Intelligent floor layout planning anticipates both current and future density targets. Hot/cold aisle orientation, modular containment solutions, and dedicated pathways for power and data optimize both cooling performance and serviceability.

Capacity planning for physical space must also consider non-IT needs such as staging, storage, and pathways for staff. Modeling future scenarios with raised floors, overhead busways, or scalable pod designs helps ensure that expansion can proceed without major disruption or costly redesigns.

Power Delivery Constraints and Optimization Factors

Power delivery is one of the most critical capacity dimensions in data centers, as every IT load requires reliable and sufficient power. Constraints often arise from utility service limits, backup generator size, and the capacity of power distribution units (PDUs).

Additionally, the distribution of power within the data center is shaped by circuit design, redundancy requirements (such as N+1 or 2N architectures), and the presence of uninterruptible power supplies (UPS). In high-density environments, even small inefficiencies can amplify costs and affect scaling potential.
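As a rough illustration of how the redundancy model shapes usable power, the sketch below computes the IT load that a set of identical UPS modules can carry under N, N+1, and 2N. The function name and the module ratings are hypothetical, and the model assumes identical modules with no derating, which real designs rarely allow.

```python
def usable_capacity_kw(module_kw, modules, scheme):
    """Return the load (kW) supportable while honoring the redundancy scheme.

    `modules` is the total number of installed modules, spares included.
    Simplifying assumptions: identical modules, no derating.
    """
    if scheme == "N":
        reserved = 0                 # no spare capacity held back
    elif scheme == "N+1":
        reserved = 1                 # one module kept as a spare
    elif scheme == "2N":
        if modules % 2:
            raise ValueError("2N needs an even number of modules")
        reserved = modules // 2      # a full mirrored set is held back
    else:
        raise ValueError(f"unknown redundancy scheme: {scheme}")
    if modules <= reserved:
        raise ValueError("not enough modules for this scheme")
    return module_kw * (modules - reserved)


# Hypothetical example: four 250 kW modules
for scheme in ("N", "N+1", "2N"):
    print(scheme, usable_capacity_kw(250, 4, scheme))
```

With the same installed hardware, the usable figure drops as the redundancy requirement rises, which is why the redundancy model has to be fixed before power headroom is quoted.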

Optimization in power delivery focuses on improving energy efficiency through modern hardware, intelligent PDUs, and power usage effectiveness (PUE) monitoring. Adopting software-defined power management and dynamic load balancing prevents hot spots and balances power draw across the facility.

Cooling System Capacity and Thermal Management Limits

Cooling is as pivotal as power in data center operations. As compute densities rise, traditional air-based cooling methods face limits, prompting a shift toward liquid cooling, in-row cooling, and hot/cold aisle containment. If cooling systems reach their thermal management limits, heat buildup can damage equipment and reduce longevity, causing unexpected downtime or requiring costly retrofits.

During capacity planning, it’s necessary to match cooling output to heat generated, accounting for both peak and average loads. Thermal management also encompasses airflow modeling, humidity control, and sensor-based monitoring. These components help maintain operational ranges and detect anomalies before they escalate.
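Matching cooling output to heat generated can be sketched as a simple headroom check. The example below assumes that essentially all electrical power drawn by IT equipment ends up as heat, and uses the standard conversion of one ton of refrigeration to 12,000 BTU/hr (about 3.517 kW). The function name, the `other_heat_kw` parameter, and the sample figures are illustrative assumptions.

```python
KW_PER_TON = 3.517  # 1 ton of refrigeration = 12,000 BTU/hr, about 3.517 kW


def cooling_headroom_kw(cooling_capacity_tons, peak_it_load_kw, other_heat_kw=0.0):
    """Return remaining cooling capacity in kW at peak load.

    A negative result means the peak heat load exceeds cooling capacity.
    `other_heat_kw` covers non-IT heat sources (lighting, people, UPS losses).
    """
    capacity_kw = cooling_capacity_tons * KW_PER_TON
    return capacity_kw - (peak_it_load_kw + other_heat_kw)


# Hypothetical room: 100 tons of cooling, 300 kW peak IT load, 20 kW other heat
print(round(cooling_headroom_kw(100, 300, 20), 1))
```

Running the same check against average load as well as peak load shows how much of the cooling plant is sized purely for peaks.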

Capacity planners must continuously track utilization, airflow dynamics, and external environmental factors. Strategies such as predictive maintenance and modular cooling can provide adaptive responses as load profiles evolve, helping keep temperatures within thresholds and operational risk low.

Computing Resources: Storage Footprint Growth Patterns and Performance Needs

Storage demand in data centers is driven by data growth, regulatory retention requirements, and increasingly data-intensive applications. Performance requirements such as IOPS, throughput, and latency play a significant role in user experience. Analyzing growth patterns by data type and retention policy helps organizations anticipate when additional capacity or new storage tiers will be needed.
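One way to anticipate when additional storage will be needed is to estimate the runway until a utilization threshold is reached. The sketch below assumes a constant compound monthly growth rate, which is a simplification; the function name, the 80% default threshold, and the sample figures are hypothetical.

```python
import math


def months_until_threshold(used_tb, capacity_tb, monthly_growth, threshold=0.8):
    """Estimate months until usage reaches `threshold` of total capacity.

    Assumes compound growth: used(t) = used_tb * (1 + monthly_growth) ** t.
    """
    limit_tb = capacity_tb * threshold
    if used_tb >= limit_tb:
        return 0.0          # already at or past the threshold
    if monthly_growth <= 0:
        return math.inf     # flat or shrinking usage never reaches it
    return math.log(limit_tb / used_tb) / math.log(1 + monthly_growth)


# Hypothetical tier: 400 TB used of 1,000 TB, growing 3% per month
print(round(months_until_threshold(400, 1000, 0.03), 1))
```

Comparing the result with procurement lead times indicates how early an expansion order would need to be placed. Running it per data type or storage tier reflects the different growth patterns described above.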

In planning, it’s critical to match storage platforms to application demands, balancing cost, scalability, and resiliency. Tiered storage, data deduplication, and compression optimize usage, while automated storage management allows for dynamic allocation and quick response to demand spikes.

Planners should take into account backup strategies, disaster recovery requirements, and the impact of emerging storage technologies. By modeling these factors, organizations avoid both costly over-provisioning and performance-related incidents.

Networking: Throughput, Latency Tolerances, and Redundancy Paths

Network infrastructure forms the backbone of data center interactions. Sufficient throughput allows servers, storage, and external connections to operate efficiently, while low latency is key for application performance and user experience.

Network constraints surface when port capacity, switch backplane limits, or bandwidth subscriptions cannot support intended traffic patterns. High-density environments or east-west traffic growth from virtualization further stress network resources.
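A common first check for this kind of constraint is the oversubscription ratio of a switch: total server-facing bandwidth divided by total uplink bandwidth. The sketch below is a minimal illustration; the function name and the port counts are hypothetical, and acceptable ratios depend on the traffic patterns of the workloads involved.

```python
def oversubscription_ratio(downlink_ports, downlink_gbps, uplink_ports, uplink_gbps):
    """Return downlink bandwidth divided by uplink bandwidth for one switch."""
    uplink_total = uplink_ports * uplink_gbps
    if uplink_total <= 0:
        raise ValueError("uplink bandwidth must be positive")
    return (downlink_ports * downlink_gbps) / uplink_total


# Hypothetical leaf switch: 48 x 25 Gbps server ports, 6 x 100 Gbps uplinks
print(oversubscription_ratio(48, 25, 6, 100))
```

A ratio of 1.0 means the uplinks can carry all server ports at line rate; higher values mean the uplinks become the bottleneck if enough servers transmit at once.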

Capacity planning must address both headroom for future traffic growth and resilience to outages or failures. Redundant paths, load-balanced aggregation, and failover mechanisms reduce the impact of hardware faults or maintenance events. Planners must model current usage, forecast peak loads, and understand critical latency thresholds per application type.


Lanir specializes in founding new tech companies for enterprise software: assembling and nurturing a great team, from early-stage funding to late-stage growth, from one design partner to hundreds of enterprise customers, from MVP to enterprise-grade product, from low-level kernel engineering to AI/ML and big data, and from one advisory board to a long list of shareholders and board members of the world's largest VCs.

Tips from the Expert

In my experience, here are tips that can help you better approach data center capacity planning:

- Model for concurrent failure scenarios, not just peak load: Many capacity plans assume optimal operations or single-failure conditions. Stress-test models with multiple simultaneous failures (e.g., cooling failure during a power spike) to assess true resilience and prevent cascading outages.

- Account for aging infrastructure degradation curves: Power and cooling systems degrade over time, reducing their actual capacity. Factor in aging curves for UPSs, CRAC units, and batteries to avoid overestimating available capacity in medium-to-long-term plans.

- Factor in software bloat and creeping inefficiencies: Software updates, agent sprawl, and configuration drift increase compute and storage demand. Include “bloat inflation” margins in compute forecasts to account for gradual resource creep.

- Use CFD modeling to expose cooling inefficiencies: Computational fluid dynamics (CFD) tools can reveal thermal anomalies and airflow dead zones not apparent from sensor data. Use them to fine-tune floor layout, rack placement, and containment strategies.

- Model stranded capacity due to imbalanced resources: Imbalances between power, cooling, and space can strand usable capacity, such as racks with sufficient space but insufficient power. Model capacity interdependencies to ensure no resource becomes a bottleneck.
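The stranded-capacity tip can be sketched as a comparison of how many racks each resource could support on its own. The example below assumes a uniform per-rack power draw and treats cooling demand as equal to power draw; the function name and the sample figures are hypothetical.

```python
def rack_headroom(space_racks, power_kw, cooling_kw, kw_per_rack):
    """Return (deployable racks, bottleneck resource, stranded racks per resource).

    Each resource is converted to the number of racks it could support alone;
    the smallest figure is the bottleneck, and the rest is stranded capacity.
    """
    limits = {
        "space": space_racks,
        "power": int(power_kw // kw_per_rack),
        "cooling": int(cooling_kw // kw_per_rack),
    }
    bottleneck = min(limits, key=limits.get)
    deployable = limits[bottleneck]
    stranded = {name: limit - deployable for name, limit in limits.items()}
    return deployable, bottleneck, stranded


# Hypothetical hall: 100 rack positions, 600 kW power, 700 kW cooling, 8 kW racks
print(rack_headroom(100, 600, 700, 8))
```

In this hypothetical hall, power limits deployment, so a quarter of the rack positions and part of the cooling plant would sit unused unless power is expanded or density is lowered.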

Top KPIs for Data Center Capacity Planning

Monitoring the right KPIs is essential for effective capacity planning. These metrics provide insight into resource utilization, efficiency, and headroom, enabling data center teams to make informed decisions about scaling, optimizing, or reconfiguring infrastructure. Below are the most critical KPIs to track:

- Power usage effectiveness (PUE): Measures how efficiently power is used by dividing total facility energy by IT equipment energy. Lower values indicate higher efficiency, with 1.0 as the theoretical minimum.

- Rack power density (kW/rack): Indicates the average power draw per rack. Helps assess how much compute can be supported per square foot and whether cooling and power systems are adequate.

- Cooling system utilization (%): Tracks the load on cooling infrastructure relative to its total capacity, highlighting potential overheating risks or overprovisioned systems.

- Floor space utilization (%): Reflects how much physical space is occupied versus available. Supports planning for expansion or consolidation.

- Network port utilization (%): Monitors use of available switch/router ports to identify bottlenecks or underutilized network assets.

- Storage utilization (%): Shows used versus total storage capacity across tiers. Indicates when additional storage is needed and helps avoid overprovisioning.

- Compute resource utilization (CPU/RAM %): Aggregates usage levels across servers to identify idle capacity or overcommitted resources.

- Redundancy compliance (N/N+1/2N adherence): Verifies whether systems are operating within their intended redundancy models to ensure fault tolerance.

- Latency and throughput metrics: Measures network and storage responsiveness under load to detect emerging performance constraints.

- Capacity forecast accuracy: Compares forecasted versus actual usage over time, helping refine prediction models and planning processes.
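Several of these KPIs reduce to simple ratios. The sketch below shows PUE, a generic utilization percentage, and forecast accuracy expressed as mean absolute percentage error (MAPE). MAPE is one common choice of accuracy measure and is an assumption here, as are the function names and sample values.

```python
def pue(total_facility_kwh, it_equipment_kwh):
    """Power usage effectiveness: total facility energy / IT equipment energy."""
    if it_equipment_kwh <= 0:
        raise ValueError("IT equipment energy must be positive")
    return total_facility_kwh / it_equipment_kwh


def utilization_pct(used, capacity):
    """Generic utilization for space, ports, storage, or cooling."""
    if capacity <= 0:
        raise ValueError("capacity must be positive")
    return 100.0 * used / capacity


def mape(forecast, actual):
    """Mean absolute percentage error; periods with zero actuals are skipped."""
    pairs = [(f, a) for f, a in zip(forecast, actual) if a != 0]
    if not pairs:
        raise ValueError("no usable data points")
    return 100.0 * sum(abs(f - a) / abs(a) for f, a in pairs) / len(pairs)


# Hypothetical monthly figures
print(pue(1500, 1000))
print(utilization_pct(42, 60))
print(round(mape([110, 90], [100, 100]), 1))
```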


Data Center Capacity Planning Methodologies

Baseline Analysis

Baseline analysis is the process of collecting data on the current state of all key resources. This means documenting actual usage for power, cooling, compute, storage, and network, as well as identifying idle or underutilized assets. Establishing an accurate baseline allows organizations to understand their existing operational envelope and supports effective comparison against projected trends and peak loads.

By using a reliable baseline, planners can identify over-provisioned areas that could be reclaimed and forecast when and where bottlenecks are likely to arise. Continuous updates to the baseline are necessary as incremental changes accumulate (such as new hardware deployments or increased business demand).
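A baseline summary of this kind can be sketched by reducing utilization samples per asset to an average and a peak, then flagging assets whose peak never exceeds an idle threshold. The asset names, the 10% threshold, and the sample data below are hypothetical.

```python
from statistics import mean


def summarize_baseline(samples, idle_below=10.0):
    """Summarize utilization samples (%) per asset and flag reclaim candidates.

    `samples` maps an asset name to a list of utilization readings.
    An asset is flagged idle only if even its peak stays below the threshold.
    """
    report = {}
    for asset, values in samples.items():
        peak = max(values)
        report[asset] = {
            "avg": mean(values),
            "peak": peak,
            "idle": peak < idle_below,
        }
    return report


# Hypothetical CPU utilization samples
baseline = summarize_baseline({"srv-a": [5, 7, 6], "srv-b": [40, 85, 60]})
print(baseline["srv-a"]["idle"], baseline["srv-b"]["idle"])
```

Using the peak rather than the average for the idle flag is a deliberately conservative choice, so that assets with occasional bursts are not reclaimed by mistake.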

Trend Forecasting and Analytics

Trend forecasting combines historical resource utilization data with analytical models to predict future demand and usage patterns. This method leverages time-series analysis, seasonality, and business growth inputs to anticipate when system upgrades or expansions will be necessary.

Varied workloads, cyclical business activities, and technology refresh cycles all require consideration in these projections, which can be visualized through dashboards for stakeholders. Advanced analytics support the identification of slow-moving and abrupt demand shifts, allowing data centers to plan resource acquisition and deployment in advance.
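As a minimal illustration of trend forecasting, the sketch below fits a least-squares line to equally spaced historical readings and estimates how many periods remain before a capacity limit is reached. It deliberately ignores seasonality and business inputs, which a production model would need; the function names and sample data are hypothetical.

```python
def linear_trend(values):
    """Fit y = slope * x + intercept by least squares, with x = 0, 1, 2, ..."""
    n = len(values)
    if n < 2:
        raise ValueError("need at least two data points")
    x_mean = (n - 1) / 2
    y_mean = sum(values) / n
    sxx = sum((x - x_mean) ** 2 for x in range(n))
    sxy = sum((x - x_mean) * (y - y_mean) for x, y in enumerate(values))
    slope = sxy / sxx
    return slope, y_mean - slope * x_mean


def periods_until_limit(values, limit):
    """Periods after the last sample until the trend line reaches `limit`.

    Returns None when usage is flat or declining.
    """
    slope, intercept = linear_trend(values)
    if slope <= 0:
        return None
    return max(0.0, (limit - intercept) / slope - (len(values) - 1))


# Hypothetical monthly power draw in kW against a 200 kW limit
print(periods_until_limit([100, 110, 120, 130], 200))
```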

Workload Modeling and Simulation

Workload modeling involves the creation of synthetic or emulated traffic profiles to understand how planned changes affect the data center environment. Through simulation, planners can observe the impact on power, cooling, network throughput, and storage utilization under various scenarios, such as growth in cloud workloads or the introduction of higher-density servers.

Modeling enables the validation of design assumptions before committing to hardware or layout investments. Simulation tools allow teams to test multiple what-if scenarios, evaluating resilience against planned and unplanned events, and uncovering hidden dependencies in operational workflows. This reduces risk, shortens deployment timelines, and ensures planned capacity expansions achieve their intended benefits.
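A very simple what-if simulation enumerates failure combinations and checks whether the remaining units can still carry the load. The sketch below treats units as independent and interchangeable, which is a strong simplification compared with dedicated simulation tools; the function name and unit ratings are hypothetical.

```python
from itertools import combinations


def failure_scenarios(unit_kw, load_kw, failures):
    """Test every combination of `failures` simultaneous unit losses.

    `unit_kw` is a list of unit capacities. Returns a dict mapping the
    indices of the failed units to True if the survivors still cover the load.
    """
    results = {}
    for failed in combinations(range(len(unit_kw)), failures):
        remaining = sum(kw for i, kw in enumerate(unit_kw) if i not in failed)
        results[failed] = remaining >= load_kw
    return results


# Hypothetical plant: four 100 kW cooling units serving a 250 kW heat load
single = failure_scenarios([100, 100, 100, 100], 250, failures=1)
double = failure_scenarios([100, 100, 100, 100], 250, failures=2)
print(all(single.values()), any(double.values()))
```

In this example the plant survives any single failure but no double failure, the kind of result that is easy to miss when only one failure at a time is considered.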

Demand Forecasting

Demand forecasting merges business planning with resource planning by translating expected application launches, user growth, or market expansion into concrete infrastructure needs. This methodology works best when business and IT teams collaborate closely, ensuring that new business initiatives or product offerings are reflected in the demand models.

The practice requires frequent updates as business strategies evolve or new projects are announced. Reliable demand forecasting includes scenario-based stress testing, allowing organizations to experiment with best-case and worst-case growth patterns. This informs procurement strategies, budget allocations, and lead times for acquiring new infrastructure.
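Translating business growth into infrastructure needs can be sketched with a per-scenario calculation. The example below assumes demand scales linearly with user count and that each server handles a fixed number of users, both of which are simplifications. The function names, the 20% headroom, and the growth rates are hypothetical.

```python
import math


def servers_needed(users, users_per_server, headroom=0.2):
    """Servers required so that each runs at most (1 - headroom) of capacity."""
    effective_capacity = users_per_server * (1 - headroom)
    return math.ceil(users / effective_capacity)


def scenario_plan(current_users, growth_rates, users_per_server, headroom=0.2):
    """Map each named growth scenario to the servers it would require."""
    return {
        name: servers_needed(current_users * (1 + rate), users_per_server, headroom)
        for name, rate in growth_rates.items()
    }


# Hypothetical scenarios for 100,000 current users at 1,000 users per server
plan = scenario_plan(
    100_000,
    {"best": 0.10, "expected": 0.25, "worst": 0.50},
    users_per_server=1_000,
)
print(plan)
```

The spread between the lowest and highest scenario gives a rough range for procurement and budget discussions, and the calculation can be rerun whenever the business assumptions change.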

Best Practices for High-Accuracy Capacity Planning

Here are some of the ways that organizations can better plan their data center capacity.

1. Build and Maintain a Comprehensive, Accurate Inventory

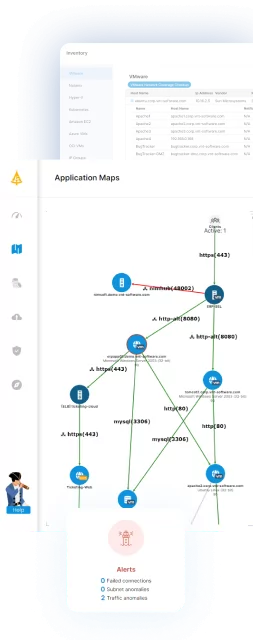

Accurate capacity planning relies on knowing exactly what assets are deployed and in use within the data center. This includes not just servers and storage, but also cables, PDUs, sensors, and supporting infrastructure. Regular inventory audits, physical verification, and asset lifecycle management tools help keep records current.

Integrating inventory with capacity management platforms ensures that allocations reflect reality and that underutilized resources can be identified and repurposed. Maintaining an updated inventory also enables more effective troubleshooting and change management. When planners know the precise models, firmware levels, and dependencies for each asset, they can accurately model the impact of new deployments, retirements, or upgrades.

2. Establish Early and Consistent Capacity Control Checkpoints

Capacity checkpoints are scheduled reviews within a project or operational cycle, where current usage, headroom, and growth trends are formally evaluated. Setting these checkpoints early ensures that capacity is considered from the start, not just as an afterthought when problems arise. Consistent checkpoints enable teams to spot gaps or oversights before they cause service interruptions or costly retrofits.

Checkpoint frequency should match the pace of change within the organization: a fast-growing environment may require monthly reviews, while others may manage with quarterly cycles. Each checkpoint should involve stakeholders from IT, facilities, and business units to provide a 360-degree perspective on evolving capacity needs.

3. Use Automated Monitoring and Telemetry-Driven Insights

Automated monitoring replaces manual data collection with real-time or near-real-time telemetry from servers, infrastructure, and environmental sensors. This generates accurate, granular data on resource utilization, environmental conditions, and the health of power and cooling systems. Automated alerting enables teams to spot anomalies and potential capacity issues before they can impact operations.
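Automated alerting on telemetry often needs to distinguish sustained breaches from momentary spikes. The sketch below flags runs where readings stay above a threshold for a minimum number of consecutive samples. The function name, the threshold, and the readings are hypothetical, and real monitoring platforms provide their own alerting rules.

```python
def sustained_breaches(readings, threshold, min_consecutive=3):
    """Return start indices of runs that stay above `threshold`.

    A run is reported once, when it reaches `min_consecutive` samples,
    so short spikes are ignored and long runs do not alert repeatedly.
    """
    starts = []
    run = 0
    for i, value in enumerate(readings):
        run = run + 1 if value > threshold else 0
        if run == min_consecutive:
            starts.append(i - min_consecutive + 1)
    return starts


# Hypothetical cooling utilization readings (%), alerting above 80%
print(sustained_breaches([70, 82, 85, 81, 79, 90, 91, 92, 93], threshold=80))
```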

Integrating telemetry with analytics tools allows for trend identification, predictive maintenance, and the validation of capacity models against live data. It also supports compliance and audit readiness by providing historical evidence of resource utilization. Automated monitoring significantly improves the accuracy and responsiveness of planning processes.

4. Integrate Cross-Team Communication to Prevent Planning Silos

Data center capacity is influenced by multiple teams including IT, facilities, network, storage, and business operations. Lack of coordination leads to planning silos, resulting in underutilized resources, excessive contingency buffering, or missed opportunities for shared efficiencies. Encouraging frequent cross-team communication breaks down these barriers, aligning technical capacity planning with business objectives and operations.

Establishing regular meetings, shared documentation platforms, and common planning frameworks unifies decision-making. When all teams contribute to forecasting, scenario analysis, and issue escalation, the organization becomes more agile. This integration also reduces the time needed to respond to resource constraints.

5. Leverage Automation, Modeling, and Scenario Simulation

Automation simplifies repetitive capacity planning tasks, from gathering telemetry to running usage reports or provisioning additional resources. By automating these steps, organizations reduce human error, accelerate feedback loops, and free up staff for higher-value analysis and design work. Automation is especially vital for responding quickly to dynamic workloads or sudden business changes.

Scenario modeling and simulation tools enable planners to test the impact of new deployments, infrastructure migrations, or changing application profiles before making capital investments. These tools help validate assumptions, optimize configurations, and avoid unanticipated constraints. Integrating automation with modeling capabilities results in a more nimble, error-resistant capacity planning process.