What Is AI Threat Detection?

AI threat detection refers to the use of artificial intelligence technologies, such as machine learning, neural networks, and analytics, to identify suspicious behavior, vulnerabilities, or malicious activities in information systems.

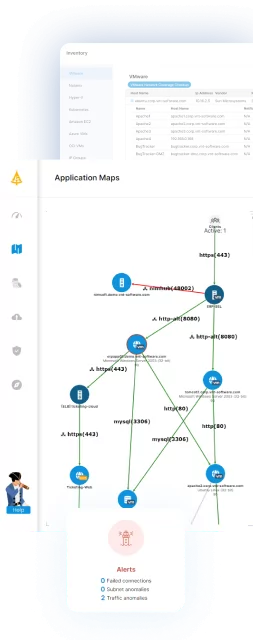

Unlike traditional signature-based methods, which rely on predefined patterns, AI-based systems can recognize novel or previously unknown threats by analyzing large volumes of data for deviations from the norm. The process involves continuous monitoring and analysis of system logs, network traffic, or user activities.

AI models are trained to differentiate between normal and abnormal behaviors, enabling security solutions to react swiftly to potential attacks. As cyber threats evolve and become more complex, AI threat detection provides a dynamic and adaptive approach, detecting both known and emerging risks in real time.
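To make the contrast with signature-based methods concrete, here is a minimal Python sketch. The placeholder hash values, the (process, destination port) event format, and the `min_count` threshold are all illustrative assumptions. A real system would learn far richer baselines with statistical or machine learning models; a frequency count is only the simplest stand-in for "learned normal behavior."

```python
from collections import Counter

# Hypothetical signature database: placeholder hash prefixes of known-malicious files.
KNOWN_BAD_HASHES = {"e3b0c442", "5d41402a"}


def signature_match(file_hash: str) -> bool:
    """Signature-based check: flags only exact matches against known-bad entries."""
    return file_hash in KNOWN_BAD_HASHES


def learn_baseline(history: list[tuple[str, int]]) -> Counter:
    """Count how often each (process, destination port) pair appears in normal activity."""
    return Counter(history)


def is_unusual(event: tuple[str, int], baseline: Counter, min_count: int = 2) -> bool:
    """Behavior-based check: flag events seen fewer than min_count times while baselining."""
    return baseline[event] < min_count


# Example: a never-before-seen malware variant (new hash) that makes nginx call out on port 4444.
baseline = learn_baseline([("nginx", 443)] * 50 + [("sshd", 22)] * 20)
print(signature_match("a1b2c3d4"))            # False: no signature exists yet
print(is_unusual(("nginx", 4444), baseline))  # True: deviates from learned behavior
```

The signature check has nothing to match against a new variant, while the behavioral check flags it because the activity itself is out of character for that process.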

Why Is AI Integral to Modern Threat Detection?

Traditional methods often struggle to keep up with the increasing volume and sophistication of cyberattacks, especially as attackers develop new strategies to evade detection. AI systems, by contrast, can be retrained or updated as new data arrives, supporting continuous monitoring and automated detection of emerging threats.

Incorporating AI into threat detection provides the capacity to analyze large datasets at high speeds, identifying patterns and anomalies that might go unnoticed by human analysts or traditional tools. This allows AI systems to detect not only known threats but also new, previously unseen attacks.

For example, AI models can quickly recognize new forms of malware, phishing attempts, or zero-day exploits by observing unusual behavior or system activity. AI also improves the accuracy and speed of incident response. By automating threat detection and initiating countermeasures, AI systems reduce the time it takes to identify and neutralize attacks.

Core Concepts and Techniques of AI in Threat Detection

Supervised Learning

Supervised learning is a foundational AI approach in threat detection that leverages labeled datasets to train models. The model is presented with examples where each input is matched to a known outcome, such as identifying network traffic as malicious or benign. Security teams use historical attack data to develop models that recognize similar attacks in real environments.

The quality and breadth of labeled data are crucial for supervised learning’s success. When done well, it enables rapid, high-confidence detection of recurring threats. However, because this approach depends on past knowledge, it may struggle with truly novel or previously unseen attacks, highlighting the need for complementary techniques.
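As a minimal sketch of the supervised workflow (train on labeled examples, then classify new traffic), the Python below uses a nearest-centroid classifier. This is a deliberately simple stand-in, not a recommendation over models such as gradient-boosted trees or neural networks, and the three features, their scaling, and the labeled samples are hypothetical.

```python
import math

# Hypothetical, pre-scaled features per connection window:
# (connections_per_min / 100, bytes_out_mb / 10, failed_logins / 10)
LABELED = [
    ((0.10, 0.20, 0.00), "benign"),
    ((0.20, 0.10, 0.10), "benign"),
    ((0.15, 0.30, 0.00), "benign"),
    ((0.90, 2.50, 0.10), "malicious"),  # exfiltration-like burst
    ((0.80, 0.20, 1.50), "malicious"),  # brute-force-like login failures
]


def train_centroids(samples):
    """Supervised training step: average the feature vectors of each label."""
    sums, counts = {}, {}
    for features, label in samples:
        acc = sums.setdefault(label, [0.0] * len(features))
        for i, value in enumerate(features):
            acc[i] += value
        counts[label] = counts.get(label, 0) + 1
    return {label: tuple(v / counts[label] for v in acc) for label, acc in sums.items()}


def predict(centroids, features):
    """Assign the label whose centroid is nearest in Euclidean distance."""
    return min(centroids, key=lambda label: math.dist(features, centroids[label]))


centroids = train_centroids(LABELED)
print(predict(centroids, (0.95, 2.00, 0.20)))  # malicious
print(predict(centroids, (0.12, 0.25, 0.00)))  # benign
```

The dependence on past knowledge is visible here: the classifier can only ever answer with labels it was trained on, and an attack that looks unlike both centroids will still be forced into one of them.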

Unsupervised Learning

Unsupervised learning operates without pre-labeled data, making it suitable for environments where new threats constantly emerge. These algorithms automatically uncover patterns, clusters, or anomalies within vast security datasets by analyzing underlying structures. For security, this means identifying suspicious behaviors that don’t match any prior signature or rule.

By focusing on deviations from established baselines, unsupervised learning can surface hidden or subtle threats, including sophisticated, slow-moving attacks. However, this approach can also generate more false positives than supervised methods, requiring iterative refinement and input from analysts to be effective in real-world use.
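One common unsupervised pattern is to cluster unlabeled activity and treat members of unusually small clusters as candidates for review. The Python sketch below implements plain k-means with a deterministic farthest-first initialization; the host features, the choice of `k=3`, and the 10% "small cluster" cutoff are assumptions chosen for illustration, and small-cluster flagging is only one of several ways to turn clusters into alerts.

```python
import math
from collections import Counter

# Hypothetical unlabeled host features: (logins_per_day / 10, distinct_destinations / 10).
WORKSTATIONS = [(1.0 + 0.1 * i, 1.0 + 0.05 * i) for i in range(10)]
SERVERS = [(5.0 + 0.1 * i, 1.2 - 0.05 * i) for i in range(10)]
POINTS = WORKSTATIONS + SERVERS + [(3.0, 8.0)]  # last host behaves unlike either group


def kmeans(points, k, iterations=20):
    """Plain k-means with deterministic farthest-first initialization.
    Returns the cluster index assigned to each point."""
    centroids = [points[0]]
    while len(centroids) < k:
        # Next seed: the point farthest from its nearest existing centroid.
        centroids.append(max(points, key=lambda p: min(math.dist(p, c) for c in centroids)))
    assignment = []
    for _ in range(iterations):
        assignment = [min(range(k), key=lambda j: math.dist(p, centroids[j])) for p in points]
        for j in range(k):
            members = [p for p, a in zip(points, assignment) if a == j]
            if members:  # keep the previous centroid if a cluster empties
                centroids[j] = (sum(x for x, _ in members) / len(members),
                                sum(y for _, y in members) / len(members))
    return assignment


def flag_small_clusters(assignment, min_fraction=0.1):
    """Return indices of points in clusters holding less than min_fraction of the data."""
    sizes = Counter(assignment)
    return [i for i, a in enumerate(assignment) if sizes[a] / len(assignment) < min_fraction]


print(flag_small_clusters(kmeans(POINTS, k=3)))  # [20]
```

No labels were needed to single out host 20. The same mechanism also explains the false-positive risk: a legitimate but rare role (say, a single backup server) would form a small cluster and be flagged just as readily.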

Reinforcement Learning

Reinforcement learning introduces adaptive, self-improving models into threat detection systems. These models make decisions in a live environment and receive feedback based on outcomes, such as correctly blocked threats or successful detections. Over time, the system optimizes its responses to changing threat patterns to achieve more accurate threat mitigation.

This technique supports automated responses to incidents and is well-suited for dynamic environments where threats morph quickly. While reinforcement learning can lead to highly effective and resilient systems, its implementation is complex and typically requires feedback mechanisms and continuous monitoring to ensure safe, reliable outcomes.
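The feedback loop can be illustrated with a heavily simplified case: one-step episodes (a contextual bandit) where an agent learns, by trial and reward, whether to allow or block an event. The states, the +1/-1 reward scheme, the 20% malicious rate, and the learning parameters are all hypothetical; a production system would face richer state, delayed rewards, and the safety guardrails mentioned above.

```python
import random

STATES = ("benign", "malicious")
ACTIONS = ("allow", "block")


def reward(state, action):
    """Hypothetical reward signal: +1 for a correct decision, -1 for a wrong one."""
    correct = (state == "malicious") == (action == "block")
    return 1.0 if correct else -1.0


def train(episodes=1000, epsilon=0.1, alpha=0.1, malicious_rate=0.2, seed=7):
    """Epsilon-greedy value learning over one-step episodes (a contextual bandit)."""
    rng = random.Random(seed)
    q = {s: {a: 0.0 for a in ACTIONS} for s in STATES}
    for _ in range(episodes):
        # Simulated environment; malicious_rate is an assumption, not a measured value.
        state = "malicious" if rng.random() < malicious_rate else "benign"
        if rng.random() < epsilon:
            action = rng.choice(ACTIONS)  # explore
        else:
            action = max(ACTIONS, key=lambda a: q[state][a])  # exploit current estimate
        # Move the action-value estimate toward the observed reward.
        q[state][action] += alpha * (reward(state, action) - q[state][action])
    return q


def policy(q, state):
    """Greedy policy derived from the learned action values."""
    return max(ACTIONS, key=lambda a: q[state][a])


q = train()
print({s: policy(q, s) for s in STATES})  # {'benign': 'allow', 'malicious': 'block'}
```

The agent is never told the right answer; it discovers that blocking malicious events and allowing benign ones pays off. The `epsilon` exploration rate is the kind of knob that needs careful oversight in a live environment, since exploring means occasionally taking the wrong action on purpose.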

Anomaly Detection

Anomaly detection is central to AI threat detection. It involves establishing a model of what is considered “normal” behavior for systems, users, or devices and flagging any deviation as anomalous. These anomalies may represent unauthorized access, data exfiltration, or malware activity that standard signature-based methods may miss.

AI-driven anomaly detection excels at finding these subtle deviations by processing large datasets in real time and learning baseline behaviors. However, it can lead to alert fatigue if not carefully tuned, as not all anomalies indicate real threats. Balancing sensitivity and specificity is key to minimizing false positives while keeping missed threats (false negatives) to a minimum.
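The sensitivity/specificity trade-off can be measured directly once anomaly scores are compared against a labeled validation set. The Python sketch below sweeps an alert threshold over hypothetical scores and labels; the numbers are invented for illustration, and any real tuning would use the organization's own confirmed incidents.

```python
# Hypothetical anomaly scores for a labeled validation set (True = confirmed threat).
SCORES = [0.10, 0.20, 0.30, 0.35, 0.40, 0.60, 0.70, 0.80, 0.90, 0.95]
LABELS = [False, False, False, False, True, False, True, False, True, True]


def sensitivity_specificity(scores, labels, threshold):
    """Treat score >= threshold as an alert and compare alerts against ground truth."""
    tp = sum(1 for s, y in zip(scores, labels) if s >= threshold and y)
    fn = sum(1 for s, y in zip(scores, labels) if s < threshold and y)
    tn = sum(1 for s, y in zip(scores, labels) if s < threshold and not y)
    fp = sum(1 for s, y in zip(scores, labels) if s >= threshold and not y)
    sensitivity = tp / (tp + fn) if tp + fn else 0.0  # share of real threats alerted on
    specificity = tn / (tn + fp) if tn + fp else 0.0  # share of benign events left quiet
    return sensitivity, specificity


for threshold in (0.30, 0.50, 0.85):
    sens, spec = sensitivity_specificity(SCORES, LABELS, threshold)
    print(f"threshold={threshold:.2f} sensitivity={sens:.2f} specificity={spec:.2f}")
```

In this toy data, raising the threshold from 0.50 to 0.85 eliminates both false positives (specificity rises from 0.67 to 1.00), but the detector now misses half of the real threats instead of a quarter. Lowering it to 0.30 catches every threat at the cost of four false alarms.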

Predictive Analytics

Predictive analytics applies statistical models and machine learning to forecast potential threats before they manifest. By analyzing patterns from historical incident data, user behaviors, and environmental signals, predictive models anticipate likely attack scenarios and high-risk periods or assets. This allows security teams to prioritize defenses and resources effectively.

When integrated with automated preventive controls, predictive analytics enables proactive defenses that can thwart attacks at early stages. However, predictions rely on the quality and relevance of input data. Continuous validation and adjustment of models are necessary to ensure ongoing accuracy in response to evolving attack methodologies.
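As a minimal example of forecasting to prioritize assets, the Python below applies simple exponential smoothing to weekly event counts and ranks assets by their one-step-ahead forecast. Exponential smoothing is a basic statistical forecaster used here as a stand-in for richer predictive models; the asset names, counts, and `alpha=0.5` are hypothetical.

```python
# Hypothetical weekly counts of confirmed suspicious events per asset (oldest first).
INCIDENTS = {
    "vpn-gateway": [2, 3, 5, 8],
    "mail-server": [6, 5, 4, 3],
    "hr-database": [1, 1, 2, 1],
}


def forecast_next(history, alpha=0.5):
    """Simple exponential smoothing: one-step-ahead forecast from past observations.
    Higher alpha weights recent weeks more heavily."""
    level = float(history[0])
    for value in history[1:]:
        level = alpha * value + (1 - alpha) * level
    return level


def rank_assets(incidents, alpha=0.5):
    """Order assets by forecast activity so defenses can be prioritized."""
    forecasts = {asset: forecast_next(h, alpha) for asset, h in incidents.items()}
    return sorted(forecasts.items(), key=lambda item: item[1], reverse=True)


for asset, expected in rank_assets(INCIDENTS):
    print(f"{asset}: {expected:.2f}")
```

The VPN gateway and the mail server each saw 18 events in total, yet the VPN gateway ranks first because its activity is rising while the mail server's is falling. This is also why the models need continuous validation: the ranking is only as good as the recent data feeding it.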

Lanir specializes in founding new tech companies for enterprise software: assembling and nurturing a great team; taking a company from early-stage funding to late-stage growth, from one design partner to hundreds of enterprise customers, and from MVP to an enterprise-grade product; spanning low-level kernel engineering to AI/ML and big data; and growing from one advisory board to a long list of shareholders and board members from the world's largest VCs.

Tips from the Expert

In my experience, here are tips that can help you better design and operationalize AI threat detection systems:

- Fuse AI detection with behavioral baselining per asset: Instead of relying on global “normal behavior” models, create asset-specific baselines (e.g., per server, user role, or API). This granularity dramatically reduces false positives in diverse environments.

- Build an AI threat-hunting loop with analyst feedback: Establish a closed loop where human analysts can flag false positives or label overlooked anomalies, feeding this data back into the AI model for retraining and refinement.

- Leverage federated learning for distributed environments: In multi-cloud or hybrid environments, use federated learning to train AI models across decentralized data sources without moving sensitive data to a central location, reducing data exposure risk.

- Incorporate adversarial AI testing into model validation: Actively test detection models against adversarial attacks (e.g., data poisoning, evasion tactics) to harden them against attackers who specifically target AI systems.

- Deploy lightweight edge AI for IoT and OT environments: Push anomaly detection capabilities closer to endpoints like IoT devices or industrial systems to detect threats in constrained environments with minimal latency.
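The per-asset baselining tip can be sketched with a simple z-score check that keeps one baseline per asset instead of one for the whole environment. The asset names, traffic figures, and `z_threshold=3.0` are hypothetical, and a z-score is just one basic way to measure deviation from a baseline.

```python
import statistics
from collections import defaultdict

POOLED = "*"  # key used when every asset shares one global baseline

# Hypothetical outbound traffic history in MB per hour, as (asset, value) pairs.
HISTORY = [("build-server", v) for v in (480, 500, 520, 510, 490)] + [
    ("hr-laptop", v) for v in (4, 5, 6, 5, 5)
]


def build_baselines(events, per_asset=True):
    """Store (mean, population standard deviation) per asset, or once for all assets."""
    groups = defaultdict(list)
    for asset, value in events:
        groups[asset if per_asset else POOLED].append(value)
    return {key: (statistics.fmean(v), statistics.pstdev(v)) for key, v in groups.items()}


def is_anomalous(baselines, asset, value, z_threshold=3.0):
    """Flag values more than z_threshold standard deviations from the relevant mean."""
    mean, std = baselines[asset if asset in baselines else POOLED]
    if std == 0:
        return value != mean
    return abs(value - mean) / std > z_threshold


per_asset = build_baselines(HISTORY)
pooled = build_baselines(HISTORY, per_asset=False)
print(is_anomalous(per_asset, "hr-laptop", 120))  # True: far outside this laptop's norm
print(is_anomalous(pooled, "hr-laptop", 120))     # False: hidden by the server's volume
```

With a single pooled baseline, the build server's volume inflates both the mean and the spread, so a sudden 120 MB/hour from an HR laptop looks ordinary. Per-asset baselines catch it, and the same granularity means each asset is judged only against its own normal behavior.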
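The federated learning tip follows the federated averaging (FedAvg) pattern: each site trains on its own data, only model parameters travel, and a coordinator averages them weighted by each site's sample count. The sketch below keeps the model deliberately trivial (a baseline centroid fitted by gradient descent) so the mechanics stay visible; the site names, data, and hyperparameters are hypothetical, and real deployments typically add protections such as secure aggregation.

```python
# Hypothetical per-site feature vectors; raw rows never leave their site.
SITES = {
    "cloud-a": [[1.0, 2.0], [3.0, 4.0]],
    "on-prem": [[5.0, 6.0], [7.0, 8.0], [9.0, 10.0]],
}


def local_update(global_w, data, lr=0.5, epochs=5):
    """Gradient descent on one site's data only.
    Toy model: a baseline centroid w minimizing the mean of 0.5 * ||w - x||^2."""
    dim = len(global_w)
    local_mean = [sum(x[i] for x in data) / len(data) for i in range(dim)]
    w = list(global_w)
    for _ in range(epochs):
        grad = [w[i] - local_mean[i] for i in range(dim)]  # gradient of the local loss
        w = [w[i] - lr * grad[i] for i in range(dim)]
    return w


def federated_average(sites, dim=2, rounds=10):
    """FedAvg-style loop: broadcast weights, train locally, average weighted by sample count."""
    w = [0.0] * dim
    total = sum(len(data) for data in sites.values())
    for _ in range(rounds):
        updates = [(local_update(w, data), len(data)) for data in sites.values()]
        w = [sum(u[i] * n for u, n in updates) / total for i in range(dim)]
    return w


w = federated_average(SITES)
print([round(v, 6) for v in w])  # [5.0, 6.0]
```

The coordinator ends up with the same centroid it would have computed from all five rows pooled together, even though it only ever saw parameter vectors.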
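For the adversarial testing tip, one simple validation step is to measure how many currently detected samples would evade the model if an attacker nudged the features they control. The Python below does this for a hypothetical linear detector, where pushing each controllable feature to its budget limit in the score-lowering direction is the strongest bounded evasion. The weights, samples, and budget are assumptions, and this covers evasion only; data poisoning needs separate tests.

```python
# Hypothetical linear detector: alert when the weighted sum of features exceeds zero.
WEIGHTS = {"bytes_out_mb": 0.08, "new_destinations": 0.5, "off_hours": 1.0}
BIAS = -4.0

# Hypothetical malicious samples that the detector currently catches.
MALICIOUS = [
    {"bytes_out_mb": 50, "new_destinations": 4, "off_hours": 1},
    {"bytes_out_mb": 30, "new_destinations": 3, "off_hours": 1},
    {"bytes_out_mb": 80, "new_destinations": 6, "off_hours": 0},
]


def detected(sample):
    return sum(WEIGHTS[f] * sample[f] for f in WEIGHTS) + BIAS > 0


def evade(sample, controllable, budget):
    """Strongest bounded evasion against a linear model: push each attacker-controlled
    feature by up to budget * |value| in whichever direction lowers the score."""
    adv = dict(sample)
    for f in controllable:
        if WEIGHTS[f] > 0:
            adv[f] = max(0.0, adv[f] - budget * abs(adv[f]))  # features assumed non-negative
        elif WEIGHTS[f] < 0:
            adv[f] = adv[f] + budget * abs(adv[f])
    return adv


def evasion_rate(samples, controllable, budget):
    """Fraction of currently detected samples that slip through after perturbation."""
    caught = [s for s in samples if detected(s)]
    evaded = [s for s in caught if not detected(evade(s, controllable, budget))]
    return len(evaded) / len(caught) if caught else 0.0


# e.g. an attacker halving per-session transfer size by splitting exfiltration into chunks
print(evasion_rate(MALICIOUS, ["bytes_out_mb"], budget=0.5))
```

Here one of the three samples slips through once transfer sizes are halved. Tracking this rate across budgets shows how cheaply the model can be evaded, and it points toward hardening steps such as leaning on features an attacker cannot easily control.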
