What Is AI in Cybersecurity?

AI in cybersecurity refers to the use of artificial intelligence technologies, such as machine learning and deep learning, to improve the speed and scale of cyber defense. AI-based systems process vast amounts of data, recognize complex patterns, and can identify potential threats more accurately than traditional security tools. They can autonomously learn from new threats, adapt to emerging tactics, and respond to incidents with little or no human intervention.

The integration of AI technologies into cybersecurity platforms aims to provide organizations with better detection, prediction, and response capabilities across networks, endpoints, and cloud environments. This includes identifying unknown or zero-day attacks, reducing false positives, and automating routine security operations.

Table of Contents

- What Is AI in Cybersecurity?

- Traditional Cybersecurity vs. AI-Enhanced Cybersecurity

- 6 Core Applications and Examples of AI in Cybersecurity

- What Are the Benefits of AI in Cybersecurity?

- Tips from the Expert

- Challenges and Limitations of AI for Cybersecurity

- Best Practices for Successful AI Adoption for Cybersecurity

- AI in Cybersecurity with Faddom

This is part of a series about application dependency mapping.

Traditional Cybersecurity vs. AI-Enhanced Cybersecurity

Traditional cybersecurity relies heavily on rule-based systems and signature-based detection methods to identify and respond to threats. These systems typically use predefined rules or signatures of known malware and attack patterns to detect potential security breaches. While effective against well-established threats, traditional methods struggle with new, unknown, or rapidly evolving attack strategies, leading to slower detection and response times.

AI-enhanced cybersecurity uses machine learning algorithms that analyze large datasets in real time, identify patterns, and detect anomalies that might indicate a security threat. Unlike traditional systems, AI models do not depend solely on predefined rules or signatures. Instead, they learn from new data on an ongoing basis, improving their ability to identify new threats. This makes AI-based systems better equipped to detect zero-day attacks and adapt to changing attack vectors.
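To make the contrast concrete, here is a minimal sketch, not any product's implementation. The signature check only recognizes exact hashes already on a blocklist, while the learned-baseline check fits the normal range of a metric (for example, outbound megabytes per hour) from history and flags large deviations. `KNOWN_BAD_SHA256`, the sample values, and the 3-standard-deviation threshold are illustrative assumptions.

```python
import hashlib
import statistics

# Signature-based detection: flag a file only if its exact hash is already known.
# KNOWN_BAD_SHA256 is a placeholder blocklist for illustration.
KNOWN_BAD_SHA256 = {hashlib.sha256(b"known-malware-sample").hexdigest()}

def signature_match(file_bytes: bytes) -> bool:
    return hashlib.sha256(file_bytes).hexdigest() in KNOWN_BAD_SHA256

# Learned-baseline detection: learn "normal" from historical data, then flag outliers.
def fit_baseline(history: list[float]) -> tuple[float, float]:
    return statistics.mean(history), statistics.pstdev(history)

def is_anomalous(value: float, baseline: tuple[float, float], threshold: float = 3.0) -> bool:
    mean, std = baseline
    if std == 0:
        return value != mean  # no variation observed: any change is unusual
    return abs(value - mean) / std > threshold  # z-score test

baseline = fit_baseline([10, 12, 11, 9, 13, 10, 12, 11])  # outbound MB per hour
print(signature_match(b"known-malware-sample-v2"))  # a one-byte mutation evades the hash
print(is_anomalous(40, baseline))                   # a never-before-seen spike is still caught
```

A mutated sample slips past the hash lookup, while the baseline check needs no prior knowledge of the attack, only of normal behavior. The trade-off is that baselines also flag benign changes, which is why tuning and review matter.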

Traditional methods often require manual intervention and rely on human expertise for decision-making, whereas AI-enhanced cybersecurity automates many processes, such as threat detection, analysis, and response. This automation lets AI systems handle repetitive tasks so that security teams can focus on more complex and critical work.

6 Core Applications and Examples of AI in Cybersecurity

1. Threat Detection and Prediction

AI excels at threat detection by correlating vast quantities of data from disparate sources and identifying subtle indicators of compromise. Machine learning models analyze patterns in historical attack data, real-time network traffic, and user activity to flag suspicious behavior and predict future incursions. By applying anomaly detection and predictive analytics, AI systems can issue early warnings and recommend countermeasures before attacks fully unfold.

AI-driven predictive models are continuously refined as new threat intelligence is ingested, enabling them to adapt to changing tactics used by adversaries. This dynamic capability is especially valuable in environments with constantly shifting threat landscapes, where static rules quickly become obsolete.
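Correlating indicators from disparate sources is the step that turns isolated weak signals into an early warning. The sketch below is a simple rule-based stand-in for that correlation step, not a predictive model: it flags a host when at least two different telemetry sources reported something about it within the same time window. The source names (`edr`, `firewall`, `idp`), the 10-minute window, and the two-source rule are hypothetical choices for illustration.

```python
from collections import defaultdict
from dataclasses import dataclass

@dataclass
class Event:
    source: str  # hypothetical telemetry source, e.g. "edr", "firewall", "idp"
    host: str
    ts: int      # Unix timestamp in seconds

def correlate(events: list[Event], window_s: int = 600, min_sources: int = 2) -> set[str]:
    """Return hosts with indicators from at least `min_sources` distinct
    sources inside any `window_s`-second sliding window."""
    by_host: dict[str, list[Event]] = defaultdict(list)
    for e in events:
        by_host[e.host].append(e)

    flagged = set()
    for host, evs in by_host.items():
        evs.sort(key=lambda e: e.ts)
        start = 0
        for end in range(len(evs)):
            # Advance the left edge until the window spans at most window_s seconds.
            while evs[end].ts - evs[start].ts > window_s:
                start += 1
            if len({e.source for e in evs[start:end + 1]}) >= min_sources:
                flagged.add(host)
                break
    return flagged
```

For example, an endpoint alert followed five minutes later by a firewall alert on the same host would be flagged, while two alerts from the same source, or alerts hours apart, would not.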

Learn more in our detailed guide to AI threat detection

2. Behavioral Analysis

Behavioral analysis enabled by AI involves scrutinizing user actions, device activities, and application processes to establish baseline norms. When significant deviations from these norms occur, the system triggers alerts or initiates automated responses. This approach is particularly effective for identifying insider threats, compromised accounts, and advanced malware that mimics legitimate behavior to evade traditional detection methods.

AI models used for behavioral analysis can distinguish between benign anomalies, such as unusual but authorized work schedules, and genuine threats, like unauthorized lateral movement within a network. The ability to learn and refine behavioral baselines reduces false positives and enables adaptive responses that evolve alongside organizational changes.
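A per-user baseline can be as simple as counting how often each user touches each resource and treating rarely or never seen access as a deviation. This sketch assumes a single signal (resource accessed) and an illustrative `min_seen` threshold; real behavioral analytics combine many signals such as time of day, location, and process activity.

```python
from collections import Counter, defaultdict

class BehaviorBaseline:
    """Per-user baseline of how often each user accesses each resource."""

    def __init__(self, min_seen: int = 3):
        self.min_seen = min_seen  # illustrative tuning parameter
        self.counts: dict[str, Counter] = defaultdict(Counter)

    def learn(self, user: str, resource: str) -> None:
        # Refine the baseline as new, approved activity is observed.
        self.counts[user][resource] += 1

    def is_deviation(self, user: str, resource: str) -> bool:
        # Flag resources this user has rarely or never accessed before.
        return self.counts.get(user, Counter())[resource] < self.min_seen

baseline = BehaviorBaseline()
for _ in range(10):
    baseline.learn("alice", "crm-db")
print(baseline.is_deviation("alice", "crm-db"))             # routine access
print(baseline.is_deviation("alice", "domain-controller"))  # possible lateral movement
```

Because `learn` keeps updating the counts, a new but legitimate pattern (such as a role change) stops alerting once it has been observed enough times, which is the adaptive behavior described above.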

3. Incident Response Automation

Incident response automation involves using AI to accelerate the containment, investigation, and remediation phases following a security event. Automated playbooks, guided by AI decision engines, execute predefined actions such as isolating affected endpoints, blocking malicious IP addresses, or launching forensic analysis scripts. This swift automation can minimize damage, reduce manual intervention, and improve consistency in handling repetitive alerts.

AI-enhanced automation can prioritize incidents based on severity and potential business impact, ensuring that critical threats receive immediate attention from security analysts. By addressing low-level incidents autonomously, AI frees up human resources for complex investigations and strategic planning.
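The prioritize-then-automate flow can be sketched as follows. The priority here is a hypothetical severity-times-asset-criticality product standing in for a model's risk score, and `PLAYBOOKS` with its action names is an invented mapping; a real SOAR platform would call its own integration APIs for each action.

```python
from dataclasses import dataclass

@dataclass
class Incident:
    id: str
    kind: str
    host: str
    priority: float

def score(severity: int, asset_criticality: int) -> float:
    # Hypothetical stand-in for a model's risk score: severity (1-5) x business impact (1-5).
    return float(severity * asset_criticality)

# Hypothetical playbooks: incident kind -> ordered containment actions.
PLAYBOOKS = {
    "malware": ["isolate_endpoint", "collect_forensics"],
    "c2_beacon": ["block_ip", "isolate_endpoint"],
}

def triage(incidents: list[Incident], escalate_at: float = 10.0):
    """Handle incidents in priority order: escalate critical ones to analysts,
    run the matching playbook for the rest."""
    escalated, automated = [], []
    for inc in sorted(incidents, key=lambda i: i.priority, reverse=True):
        if inc.priority >= escalate_at:
            escalated.append(inc.id)  # critical: a human analyst takes over
        else:
            actions = PLAYBOOKS.get(inc.kind, ["open_ticket"])  # fallback for unknown kinds
            automated.append((inc.id, actions))
    return escalated, automated
```

Sorting by priority before dispatching ensures the most severe incidents reach analysts first, while low-level incidents are handled by predefined actions without waiting in the queue.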

4. Phishing Detection and Prevention

AI is widely adopted for detecting and preventing phishing attacks, which are increasingly sophisticated and tailored to their targets. Natural language processing (NLP) algorithms analyze the content, metadata, and structure of emails or messages, identifying suspicious patterns and flagging malicious links or attachments.

In addition to scanning email, AI-based tools monitor user interactions for risky behaviors, such as clicking unverified links or entering credentials on unfamiliar sites. Real-time analysis allows organizations to block compromised communication channels and warn users immediately, reducing the likelihood of successful credential theft or malware execution.
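As a small illustration of NLP-based content analysis, the sketch below implements a multinomial Naive Bayes text classifier with Laplace smoothing, a classic baseline for spam and phishing filtering. The six training messages are toy data invented for this example; production systems train on large labeled corpora and also use header, URL, and sender-reputation features that this sketch omits.

```python
import math
import re
from collections import Counter

def tokenize(text: str) -> list[str]:
    return re.findall(r"[a-z0-9']+", text.lower())

class NaiveBayes:
    """Multinomial Naive Bayes with Laplace (add-one) smoothing."""

    def fit(self, docs: list[str], labels: list[str]) -> "NaiveBayes":
        self.classes = sorted(set(labels))
        self.doc_counts = Counter(labels)
        self.word_counts = {c: Counter() for c in self.classes}
        for doc, label in zip(docs, labels):
            self.word_counts[label].update(tokenize(doc))
        self.vocab = set().union(*self.word_counts.values())
        return self

    def predict(self, doc: str) -> str:
        total_docs = sum(self.doc_counts.values())
        best, best_lp = None, -math.inf
        for c in self.classes:
            # Log prior plus log likelihood of each token under class c.
            lp = math.log(self.doc_counts[c] / total_docs)
            denom = sum(self.word_counts[c].values()) + len(self.vocab)
            for w in tokenize(doc):
                lp += math.log((self.word_counts[c][w] + 1) / denom)
            if lp > best_lp:
                best, best_lp = c, lp
        return best

# Toy training data, invented for illustration only.
docs = [
    "urgent verify your account password now",
    "your account is suspended click here to verify",
    "reset your password immediately or lose access",
    "meeting notes attached for tomorrow",
    "lunch on friday with the team",
    "quarterly report draft attached for review",
]
labels = ["phish", "phish", "phish", "ham", "ham", "ham"]
model = NaiveBayes().fit(docs, labels)
print(model.predict("please verify your password"))
```

Working in log space avoids numeric underflow when messages are long, and add-one smoothing keeps an unseen word from zeroing out a class.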

5. Malware Detection and Analysis

Traditional antivirus solutions rely on signature databases, which cannot keep pace with the rapid creation and mutation of malware. AI-driven malware detection systems go beyond signatures by analyzing file attributes, code structure, and behavioral patterns in real time. Machine learning models examine unknown binaries and estimate how likely they are to be malicious based on observed tactics and heuristics.

Automated malware analysis using AI also accelerates reverse engineering, providing security teams with detailed insights into how new strains operate. When combined with sandboxing and behavioral analysis, AI solutions can promptly isolate, quarantine, and analyze suspicious files, preventing their spread within the organization.
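Static file attributes are a typical input to such models. One widely used feature is Shannon entropy over a file's bytes: packed or encrypted payloads tend to score close to the maximum of 8 bits per byte. The sketch below extracts a few such features; the specific feature set is an illustrative assumption, and a real classifier would use many more (imports, section layout, strings, and so on).

```python
import math
from collections import Counter

def shannon_entropy(data: bytes) -> float:
    """Entropy in bits per byte, from 0.0 (one repeated byte) to 8.0 (uniform)."""
    if not data:
        return 0.0
    n = len(data)
    return -sum((c / n) * math.log2(c / n) for c in Counter(data).values())

def static_features(data: bytes) -> dict[str, float]:
    # A few illustrative static features a malware model might consume.
    return {
        "size": float(len(data)),
        "entropy": shannon_entropy(data),
        "has_mz_header": float(data[:2] == b"MZ"),  # Windows PE files start with "MZ"
    }

print(static_features(b"MZ" + bytes(range(256))))
```

High entropy alone is not proof of malice, since compressed archives and media files also score high, which is why it is combined with other features rather than used as a rule.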

6. Network Security and Anomaly Detection

AI-powered tools improve network security by continuously monitoring traffic, identifying unusual patterns, and correlating diverse data streams. These systems detect anomalies that might signify a breach, such as unexpected data transfers, command-and-control signals, or unauthorized access attempts.

Unlike rule-based intrusion detection systems, AI solutions evolve as network environments change, reducing the risk posed by previously unseen attack methods. Using graph-based analytics and unsupervised learning, AI tools uncover lateral movement, privilege escalation, and other suspicious behaviors that might escape manual scrutiny. The technology also aids in visualizing network topology, offering security teams an up-to-date map of their digital assets.
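A simple form of graph-based analysis treats hosts as nodes and observed connections as edges. The sketch below builds a baseline communication graph from `(src, dst, port)` flow records, then flags a host that suddenly connects to many peers it has never talked to before, a common lateral-movement pattern. The flow format, host names, and `max_new_peers` threshold are assumptions for illustration; production tools apply richer graph and unsupervised-learning techniques.

```python
from collections import defaultdict

def build_graph(flows):
    """Directed host graph: src -> set of (dst, port) edges seen in the flows."""
    graph = defaultdict(set)
    for src, dst, port in flows:
        graph[src].add((dst, port))
    return graph

def new_edges(baseline, current_flows):
    """Flows whose (src -> dst:port) edge never appeared in the baseline graph."""
    return [(s, d, p) for s, d, p in current_flows if (d, p) not in baseline.get(s, set())]

def fan_out_alerts(baseline, current_flows, max_new_peers=3):
    # A host reaching many previously unseen internal peers (e.g. SMB on port 445)
    # resembles scanning or lateral movement.
    new_peers = defaultdict(set)
    for s, d, _ in new_edges(baseline, current_flows):
        new_peers[s].add(d)
    return {s for s, peers in new_peers.items() if len(peers) > max_new_peers}

baseline = build_graph([("web-1", "db-1", 5432), ("web-1", "cache-1", 6379)])
today = [("web-1", "db-1", 5432)] + [("web-1", f"host-{i}", 445) for i in range(5)]
print(fan_out_alerts(baseline, today))
```

Rebuilding the baseline periodically from recent, reviewed traffic is what lets this kind of detection follow changes in the environment instead of relying on fixed rules.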

Related content: Read our guide to AI security tools

What Are the Benefits of AI in Cybersecurity?

The integration of AI into cybersecurity offers several distinct advantages that significantly improve threat defense and incident response capabilities. These benefits help organizations stay ahead of evolving cyber threats while improving operational efficiency:

- Enhanced threat detection: AI systems can analyze vast amounts of data to identify patterns and anomalies faster and more accurately than traditional tools, improving the chances of detecting both known and unknown threats.

- Faster response times: By automating routine tasks like threat detection and incident response, AI reduces the time it takes to address and mitigate potential security breaches.

- Predictive capabilities: By analyzing past threats and behaviors, AI can help anticipate and prevent future attacks.

- Reduced false positives: AI-powered systems continuously learn from new data, improving their accuracy and reducing the number of false alarms, which helps security teams focus on real threats.

- Scalability: AI technologies can scale to meet the needs of large organizations, providing security coverage across networks, endpoints, and cloud environments without the need for proportional increases in human resources.

- Improved efficiency: AI automates many manual and repetitive tasks, enabling security teams to focus on more complex issues, increasing overall productivity and effectiveness.

- Adaptability: AI systems can quickly adapt to new attack methods, helping cybersecurity strategies remain effective as threats evolve.

Lanir specializes in founding new tech companies for enterprise software: assembling and nurturing a great team, and taking a company from early-stage funding to late-stage growth, from one design partner to hundreds of enterprise customers, from MVP to an enterprise-grade product, from low-level kernel engineering to AI/ML and big data, and from one advisory board to a long list of shareholders and board members from the world's largest VCs.

Tips from the Expert

In my experience, here are tips that can help you better design, deploy, and manage AI for cybersecurity:

- Design AI models with adversarial resilience: Incorporate defenses against adversarial machine learning techniques (e.g., evasion attacks, model inversion) to prevent attackers from exploiting weaknesses in AI models.

- Integrate AI with zero trust architecture: Embed AI into zero trust frameworks to continuously verify users, devices, and applications in real time, detecting lateral movement and micro-segmenting access dynamically.

- Deploy AI for supply chain and third-party risk monitoring: Extend AI-powered anomaly detection to monitor vendor APIs, software updates, and supply chain interactions to catch risks like SolarWinds-style attacks early.

- Use multi-layered AI ensembles for richer insights: Combine supervised, unsupervised, and reinforcement learning in layered ensembles. This hybrid approach improves detection accuracy and adapts better to novel attack vectors.

- Leverage AI for automated deception campaigns: Enable AI to create dynamic honeypots, decoy data, and fake credentials that lure attackers, collect TTPs, and feed intelligence back into defense systems.
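The trip-wire side of a deception campaign can be sketched with honeytokens: decoy credentials that no legitimate user or service should ever use, so any login attempt with one is a high-confidence signal. In this sketch the `svc_backup_` naming scheme and the planting location are hypothetical; deciding where to plant decoys and making them look realistic is the part where AI-driven tooling would contribute, and it is not modeled here.

```python
import secrets
from typing import Optional

class HoneytokenRegistry:
    """Issue decoy credentials and raise an alert whenever one is used."""

    def __init__(self):
        self._tokens: dict[str, str] = {}  # decoy username -> where it was planted

    def plant(self, location: str) -> tuple[str, str]:
        username = f"svc_backup_{secrets.token_hex(3)}"  # hypothetical naming scheme
        password = secrets.token_urlsafe(16)
        self._tokens[username] = location
        return username, password

    def check_login(self, username: str, source_ip: str) -> Optional[dict]:
        # Any use of a decoy credential indicates an attacker harvested it.
        if username in self._tokens:
            return {
                "alert": "honeytoken_used",
                "username": username,
                "planted_in": self._tokens[username],
                "source_ip": source_ip,  # feeds attacker TTP intelligence
            }
        return None
```

Hooking `check_login` into the authentication pipeline means a stolen decoy reveals both the compromised location (where it was planted) and the attacker's origin, without generating noise from legitimate users.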

Challenges and Limitations of AI for Cybersecurity

There are several factors that can impact the effectiveness of AI in cybersecurity practices.

Data Privacy Concerns

Integrating AI into cybersecurity operations raises significant data privacy concerns, especially when systems require access to sensitive log files, user communications, or device metadata. Collecting, storing, and processing this data can lead to regulatory violations and increased exposure if not protected properly. AI tools must operate within the scope of privacy laws and industry standards to maintain compliance and avoid legal penalties.

Additionally, training AI models often involves extensive datasets that could include personally identifiable information (PII). Mishandling this data can result in unauthorized disclosure and undermine trust in both AI and security operations.
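One common mitigation is to pseudonymize identifiers before they reach training pipelines. A keyed hash (HMAC-SHA256) maps the same user to the same token, so models can still learn per-user behavior, but the raw identifier is not stored alongside the data. The field names below are assumptions; the key must be managed separately (for example, in a secrets vault), and pseudonymized data can still count as personal data under regulations such as GDPR.

```python
import hashlib
import hmac

def pseudonymize(value: str, key: bytes) -> str:
    """Deterministic keyed hash: same input and key -> same 16-hex-char token."""
    return hmac.new(key, value.encode("utf-8"), hashlib.sha256).hexdigest()[:16]

def scrub_record(record: dict, key: bytes, pii_fields=("user", "email", "src_ip")) -> dict:
    # Replace PII fields with tokens; keep behavioral fields (bytes, counts) intact.
    return {k: pseudonymize(str(v), key) if k in pii_fields else v for k, v in record.items()}

key = b"example-key-kept-in-a-vault-0001"  # illustrative only; never hard-code real keys
print(scrub_record({"user": "alice", "email": "alice@example.com", "bytes_out": 1200}, key))
```

Using a keyed hash rather than a plain SHA-256 matters because common usernames and IP addresses could otherwise be recovered by hashing candidate values and comparing.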

Bias in AI Models

AI models are susceptible to bias if trained on imperfect or unrepresentative datasets. In cybersecurity, this can result in inaccurate threat assessments, overlooked attack vectors, or disproportionate scrutiny of certain user groups. Such biases undermine the accuracy and fairness of AI-driven systems, increasing the likelihood of false alarms and missed intrusions.

Addressing bias requires ongoing evaluation of training data sources, model selection, and performance metrics. Security teams must regularly audit AI outputs, retrain models using diverse and current datasets, and involve multidisciplinary experts in the validation process.
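Auditing AI outputs for disproportionate scrutiny can start with comparing error rates across user groups. The sketch below computes the false positive rate (benign activity wrongly flagged) per group from labeled alert outcomes and flags groups that exceed the best-treated group by more than a tolerance. The grouping (departments), sample rows, and 0.1 tolerance are illustrative assumptions.

```python
from collections import defaultdict

def false_positive_rate_by_group(rows):
    """rows: iterable of (group, predicted_malicious, actually_malicious)."""
    fp, negatives = defaultdict(int), defaultdict(int)
    for group, predicted, actual in rows:
        if not actual:  # only benign events can become false positives
            negatives[group] += 1
            if predicted:
                fp[group] += 1
    return {g: fp[g] / negatives[g] for g in negatives}

def disproportionate_groups(rates: dict[str, float], tolerance: float = 0.1) -> set[str]:
    # Groups whose FPR exceeds the lowest group's FPR by more than `tolerance`.
    floor = min(rates.values())
    return {g for g, r in rates.items() if r > floor + tolerance}

rows = [
    ("finance", True, False), ("finance", False, False),
    ("finance", False, False), ("finance", False, False),
    ("engineering", True, False), ("engineering", True, False),
    ("engineering", False, False), ("engineering", False, False),
    ("engineering", True, True),
]
rates = false_positive_rate_by_group(rows)
print(rates, disproportionate_groups(rates))
```

A flagged group is a prompt for investigation (unrepresentative training data, a proxy feature, or a genuinely different risk profile), not an automatic verdict of bias.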

Resource Intensive

Deploying AI in cybersecurity can be resource-intensive, requiring significant investment in hardware, software, and personnel. Training, updating, and maintaining machine learning models require high computational power and storage capacity. This can become a barrier for smaller organizations lacking the necessary technical expertise or budget to support enterprise-scale AI solutions.

Continuous model validation, retraining, and integration with existing security infrastructure impose ongoing operational costs. Security teams must balance the benefits of AI adoption against the expense and complexity it introduces.

Best Practices for Successful AI Adoption for Cybersecurity

Here are some of the ways organizations can ensure better use of AI within their cybersecurity strategy.

1. Ensure Data Quality and Integrity

Successful AI implementation hinges on the quality and integrity of input data. Poor-quality data—laden with errors, redundancies, or irrelevant signals—undermines the accuracy of AI-driven threat detection and response.

Security teams must establish data governance policies that prioritize ongoing collection, cleaning, normalization, and validation of security-related data sources. Periodic audits are essential to maintain the quality of both historical and real-time datasets.

High-integrity datasets not only improve model performance but also support more reliable threat predictions and faster incident identification. To ensure reliable data pipelines amidst changing environments, organizations should automate data validation processes and implement monitoring systems that flag anomalies in data collection or changes in data quality.
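Automated validation can sit at the front of the data pipeline. This sketch checks log records against a hypothetical required schema, rejects unparseable, timezone-less, or future timestamps, normalizes host names, and removes duplicates, keeping a reason for every rejection so that a rising rejection rate can itself be monitored as a data-quality signal. `REQUIRED` and the record layout are assumptions.

```python
from datetime import datetime, timezone

REQUIRED = {"timestamp", "host", "event_type"}  # hypothetical schema

def validate(records):
    """Split raw log records into (clean, rejected-with-reason)."""
    seen, clean, rejected = set(), [], []
    now = datetime.now(timezone.utc)
    for r in records:
        missing = REQUIRED - set(r)
        if missing:
            rejected.append((r, f"missing fields: {sorted(missing)}"))
            continue
        try:
            ts = datetime.fromisoformat(r["timestamp"])
        except (TypeError, ValueError):
            rejected.append((r, "unparseable timestamp"))
            continue
        if ts.tzinfo is None or ts > now:
            rejected.append((r, "timestamp missing timezone or in the future"))
            continue
        host = str(r["host"]).strip().lower()  # normalize host names
        key = (ts, host, r["event_type"])
        if key in seen:
            rejected.append((r, "duplicate"))
            continue
        seen.add(key)
        clean.append({**r, "host": host})
    return clean, rejected

def rejection_rate(clean, rejected) -> float:
    # Track this over time; a sudden jump suggests a broken collector or format change.
    return len(rejected) / max(1, len(clean) + len(rejected))
```

Normalizing before deduplicating matters: `" WEB-1 "` and `"web-1"` describe the same host and would otherwise be counted twice.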

2. Maintain Human Oversight and Expertise

Despite AI’s sophistication, human oversight remains crucial in every stage of the cybersecurity lifecycle. Human experts validate model outputs, interpret complex threat scenarios, and refine response strategies that AI platforms may not fully grasp.

Maintaining a mix of automated analysis and skilled personnel ensures that AI-driven recommendations align with business context and compliance requirements. Continuous training and upskilling help security teams to work effectively alongside AI systems, fostering trust and minimizing the likelihood of critical errors.

Human intervention is necessary when AI models encounter edge cases, ambiguous data, or ethical dilemmas. Integrating human judgment with automated insights improves detection accuracy while avoiding overdependence on black-box algorithms.
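A common way to combine automation with human oversight is confidence-based routing: act automatically only at the extremes of a model's score and send the ambiguous middle band to an analyst, whose verdicts are then kept as labels for retraining. The thresholds and the in-memory `feedback` list below are illustrative assumptions, not recommended values.

```python
def route(alert_score: float, auto_contain_at: float = 0.95, auto_close_below: float = 0.05) -> str:
    """Route an alert by the model's maliciousness score in [0, 1]."""
    if not 0.0 <= alert_score <= 1.0:
        raise ValueError("score must be in [0, 1]")
    if alert_score >= auto_contain_at:
        return "auto_contain"    # high confidence: act now, then notify an analyst
    if alert_score < auto_close_below:
        return "auto_close"      # very low: close, but retain for sampling and audit
    return "analyst_review"      # ambiguous middle band: a human decides

feedback: list[tuple[dict, bool]] = []

def record_verdict(features: dict, is_malicious: bool) -> None:
    # Analyst decisions become labeled examples for the next retraining cycle.
    feedback.append((features, is_malicious))
```

Widening or narrowing the middle band is a direct lever on analyst workload versus the risk of acting on a wrong automated decision.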

3. Ensure Model Explainability

Model explainability is vital for gaining stakeholder trust, meeting regulatory requirements, and enabling incident investigations. Security teams must select and optimize AI models that provide interpretability—allowing analysts to understand why a given alert or decision was made.

Techniques such as feature attribution and visualization help translate complex predictions into actionable intelligence. Explainability also supports iterative improvement of AI solutions. By understanding the strengths and limitations of model reasoning, organizations can better tune detection thresholds, features, and response workflows.

Transparent AI operations reduce risks associated with hidden biases, erroneous outputs, or compliance gaps. This supports greater accountability and rapid troubleshooting in dynamic security environments.
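Feature attribution is easiest to see with a linear model. For logistic regression, each feature's contribution to the logit is simply its weight times its value, which gives an exact additive explanation of the score relative to an all-zero input. The weights, bias, and feature names below are hypothetical; for nonlinear models, tools such as SHAP estimate comparable per-feature contributions.

```python
import math

# Hypothetical trained logistic-regression weights for a login-risk model.
WEIGHTS = {"failed_logins_1h": 0.8, "new_country": 2.1, "off_hours": 0.6, "mfa_passed": -2.5}
BIAS = -3.0

def explain(features: dict[str, float]) -> tuple[float, list[tuple[str, float]]]:
    """Return the risk probability and per-feature logit contributions,
    largest absolute contribution first."""
    contributions = {name: w * features.get(name, 0.0) for name, w in WEIGHTS.items()}
    logit = BIAS + sum(contributions.values())
    probability = 1 / (1 + math.exp(-logit))  # sigmoid
    ranked = sorted(contributions.items(), key=lambda kv: abs(kv[1]), reverse=True)
    return probability, ranked

prob, reasons = explain({"failed_logins_1h": 5, "new_country": 1, "off_hours": 1, "mfa_passed": 0})
print(f"risk={prob:.2f}", reasons[:2])
```

An analyst reading this output sees not just a high score but that repeated failed logins drove most of it, which supports both the investigation and later threshold tuning.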

4. Monitor AI System Performance

Continuous monitoring of AI system performance ensures sustained accuracy and relevance in the face of evolving threats. Security teams should track metrics such as detection rates, false positives, response times, and model drift to promptly identify degradation or operational issues. Real-time dashboards and automated alerts enable proactive tuning and remediation.

Routine model evaluation should also include stress tests, adversarial simulations, and validation against fresh threat intelligence sources. These processes ensure AI platforms remain adaptable and aligned with organizational defense priorities. Comprehensive performance monitoring, combined with iterative retraining and stakeholder feedback, helps maintain the efficacy and resilience of AI-enhanced cybersecurity solutions.
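The metrics named above can be computed from alert outcomes, and drift can be tracked by comparing a feature's or score's recent distribution with its training-time distribution. This sketch uses the Population Stability Index (PSI); the equal-width binning and the often-cited rule of thumb in the docstring are conventions, not fixed standards, and the sample counts are invented.

```python
import math

def detection_metrics(tp: int, fp: int, tn: int, fn: int) -> dict[str, float]:
    return {
        "detection_rate": tp / (tp + fn) if tp + fn else 0.0,       # recall
        "false_positive_rate": fp / (fp + tn) if fp + tn else 0.0,
        "precision": tp / (tp + fp) if tp + fp else 0.0,
    }

def psi(expected: list[float], actual: list[float], bins: int = 10) -> float:
    """Population Stability Index between a reference sample and a recent one.
    Common rule of thumb: < 0.1 stable, 0.1-0.25 moderate shift, > 0.25 drift."""
    lo, hi = min(expected), max(expected)
    width = (hi - lo) / bins or 1.0  # guard against a constant reference sample

    def shares(xs):
        counts = [0] * bins
        for x in xs:
            i = min(max(int((x - lo) / width), 0), bins - 1)  # clamp out-of-range values
            counts[i] += 1
        return [max(c / len(xs), 1e-6) for c in counts]  # floor avoids log(0)

    e, a = shares(expected), shares(actual)
    return sum((ai - ei) * math.log(ai / ei) for ei, ai in zip(e, a))

print(detection_metrics(tp=90, fp=10, tn=890, fn=10))
```

Computing PSI on the model's output scores week over week is a cheap early warning that the model should be re-validated or retrained, often before detection rates visibly drop.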

5. Comply with Legal and Regulatory Standards

Legal and regulatory compliance is foundational to AI deployment in cybersecurity. Organizations must ensure that their AI-driven tools meet requirements around data privacy, access controls, auditability, and transparency—especially under frameworks like GDPR, HIPAA, or industry-specific regulations. Failure to comply can result in fines, reputational damage, and operational disruptions.

Security teams should document AI system processes, maintain audit logs, and implement controls to prevent unauthorized access to sensitive information. Legal counsel and compliance professionals should collaborate with security and IT teams during implementation and ongoing use of AI solutions.
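For auditability, the actions AI systems take (and analyst overrides) can be written to a tamper-evident log. In this sketch each entry includes the hash of the previous entry, so editing or deleting any earlier record breaks verification. It is an in-memory illustration only: a real deployment would write to immutable or WORM storage and anchor the latest hash externally, since an attacker with full write access could otherwise rebuild the entire chain.

```python
import hashlib
import json
import time

def _digest(body: dict) -> str:
    return hashlib.sha256(json.dumps(body, sort_keys=True).encode("utf-8")).hexdigest()

class AuditLog:
    """Append-only, hash-chained log of AI system actions."""

    def __init__(self):
        self.entries: list[dict] = []

    def append(self, actor: str, action: str, detail: dict) -> dict:
        prev = self.entries[-1]["hash"] if self.entries else "0" * 64
        body = {"ts": time.time(), "actor": actor, "action": action,
                "detail": detail, "prev": prev}
        body["hash"] = _digest(body)  # hash covers every field, including `prev`
        self.entries.append(body)
        return body

    def verify(self) -> bool:
        prev = "0" * 64
        for entry in self.entries:
            body = {k: v for k, v in entry.items() if k != "hash"}
            if entry["prev"] != prev or entry["hash"] != _digest(body):
                return False
            prev = entry["hash"]
        return True

log = AuditLog()
log.append("model-v3", "auto_contain", {"host": "laptop-7"})
log.append("analyst:jdoe", "override_release", {"host": "laptop-7"})
print(log.verify())
```

The actor names are hypothetical; recording both the model version and the human who overrode it is what lets auditors reconstruct who (or what) made each decision.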

AI in Cybersecurity with Faddom

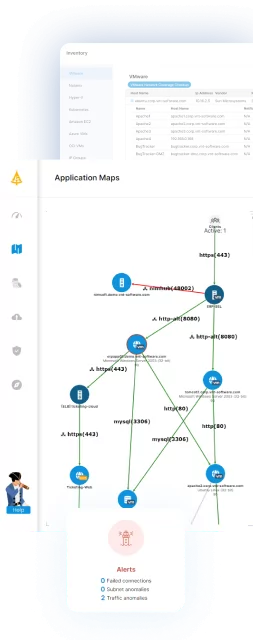

As AI plays a crucial role in modern cyber defense, Faddom enables organizations to strengthen their visibility and control by mapping all application dependencies in real time across cloud, on-premises, and hybrid environments.

Faddom’s Lighthouse AI introduces intelligent traffic anomaly detection. By automatically identifying unusual east-west traffic and shadow IT behavior, Lighthouse AI empowers security teams to detect threats early, reduces the time required for manual investigations, and allows for more precise responses.

Book a demo to discover how Faddom and Lighthouse AI can strengthen your cybersecurity strategy!