What Is AI Intrusion Detection?

AI intrusion detection refers to the use of artificial intelligence technologies to identify unauthorized access or malicious activity within a network or system. Unlike traditional intrusion detection systems, which rely mainly on predefined signatures and static rules, AI-based solutions use machine learning and deep learning to analyze large volumes of data in real time and identify anomalous patterns that could indicate a breach.

These systems can learn from historical attack patterns and adapt to emerging threats without constant manual updates, which makes them more efficient and scalable. Their main advantage is the ability to detect zero-day attacks by identifying novel patterns that don’t match predefined signatures.

They do this through statistical models and pattern recognition techniques. AI intrusion detection can also integrate with broader security frameworks, enabling automated responses that limit damage.


Evolution of Intrusion Detection and Security Threats

Intrusion detection systems (IDS) have evolved significantly since their inception in the 1980s. Early systems relied on static rule sets and signature-based methods, where known attack patterns were manually encoded. These systems were effective against previously seen threats but failed to detect novel attacks or sophisticated evasion techniques.

In the 2000s, anomaly-based detection emerged, introducing statistical methods to model normal behavior and identify deviations. This allowed detection of previously unknown threats but also increased false positives, as normal network behavior could vary significantly over time and across environments.

As cyber threats grew more advanced—such as polymorphic malware, lateral movement, and encrypted command-and-control traffic—the limitations of static and rule-based systems became more pronounced. Attackers began using stealthy and adaptive techniques that blended with legitimate activity, making traditional IDS less reliable.

The advent of AI brought a shift toward behavioral and predictive models. Machine learning algorithms started to analyze traffic patterns, user behavior, and system logs at scale. These models could continuously learn and adjust to evolving threats, reducing the need for manual signature updates and enabling detection of complex attack chains in real time.

Modern AI-powered IDS solutions now integrate with broader security information and event management (SIEM) systems, allowing for context-aware detection and automated threat responses.


Core Components of AI Intrusion Detection Systems

Data Collection

Data collection is the first step in any AI intrusion detection system, where vast amounts of network and system logs are continuously gathered from multiple endpoints. These logs contain vital information such as login attempts, file access records, and network traffic. Real-time and historical data help establish a baseline of normal activity for comparison.

The quality and diversity of this data are critical for effective detection. This phase also involves preprocessing the raw data to filter out noise, irrelevant information, and redundancies. Clean, structured data supports accurate model training and reduces the risk of errors.
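
As an illustration of the preprocessing step, the sketch below normalizes raw login events, drops malformed records, and removes exact duplicates before they reach a model. The field names (ts, user, src_ip, outcome) and the accepted timestamp formats are hypothetical. A real pipeline would take them from its own log schema.

```python
from datetime import datetime, timezone

# Hypothetical timestamp formats seen across different log sources.
TIMESTAMP_FORMATS = ("%Y-%m-%dT%H:%M:%S%z", "%d/%b/%Y:%H:%M:%S %z")
# Hypothetical schema: every record must carry these fields.
REQUIRED_FIELDS = ("ts", "user", "src_ip", "outcome")


def parse_timestamp(raw):
    """Return a UTC datetime, or None if no known format matches."""
    for fmt in TIMESTAMP_FORMATS:
        try:
            return datetime.strptime(raw, fmt).astimezone(timezone.utc)
        except ValueError:
            continue
    return None


def preprocess(records):
    """Normalize, filter, and de-duplicate raw login records."""
    seen = set()
    clean = []
    for rec in records:
        # Drop records missing any required field (noise, truncated lines).
        if any(not rec.get(field) for field in REQUIRED_FIELDS):
            continue
        ts = parse_timestamp(rec["ts"])
        if ts is None:
            continue  # unparseable timestamp
        normalized = {
            "ts": ts.isoformat(),
            "user": rec["user"].strip().lower(),
            "src_ip": rec["src_ip"].strip(),
            "outcome": rec["outcome"].strip().lower(),
        }
        # The same event shipped twice (e.g. by two collectors) is dropped.
        key = tuple(normalized.values())
        if key in seen:
            continue
        seen.add(key)
        clean.append(normalized)
    return clean
```

Normalizing before de-duplicating matters here. Two copies of one event that arrive in different formats or letter case only collapse into one record after normalization.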

Model Training

Model training is where machine learning models learn to distinguish normal from abnormal behavior within a network. Models can learn in three ways:

- Supervised learning uses labeled datasets of typical user behavior and known attack vectors.
- Unsupervised learning uses unlabeled data alone and models what normal looks like.
- Reinforcement learning uses feedback on the model's own decisions.

In every case, the quality of the training data largely determines how well the model performs.

The training process must account for dynamic environments, so models are retrained regularly to keep pace with evolving threats. Techniques like cross-validation help estimate how well a model generalizes and reveal overfitting before deployment. A well-trained model can spot novel attacks while keeping false positives low.
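
To make cross-validation concrete, here is a minimal k-fold sketch in plain Python. The model is deliberately trivial: a single threshold on one hypothetical feature, failed logins per hour. That keeps the focus on how folds rotate between training and evaluation. A real system would plug in its own learner and shuffle the data first.

```python
def fit_threshold(train):
    """Pick the threshold with the best training accuracy.

    The 'model' predicts malicious (1) when value > threshold.
    """
    candidates = sorted({x for x, _ in train})

    def accuracy(thr):
        return sum((x > thr) == bool(y) for x, y in train) / len(train)

    # max() keeps the first (smallest) threshold among ties.
    return max(candidates, key=accuracy)


def k_fold_scores(data, k=5):
    """Return held-out accuracy for each of k folds.

    Assumes `data` is a list of (value, label) pairs already in random order.
    """
    folds = [data[i::k] for i in range(k)]  # simple interleaved split
    scores = []
    for i in range(k):
        test = folds[i]
        train = [row for j, fold in enumerate(folds) if j != i for row in fold]
        thr = fit_threshold(train)
        correct = sum((x > thr) == bool(y) for x, y in test)
        scores.append(correct / len(test))
    return scores
```

Each fold is scored on data its model never saw. A large gap between training accuracy and these held-out scores is the usual sign of overfitting.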

Detection Mechanism

The detection mechanism evaluates live data and identifies potential intrusions. AI systems analyze incoming data streams for deviations from established baselines and from patterns learned during training. Techniques like anomaly detection, statistical modeling, and clustering algorithms work together to flag suspicious activities.

The mechanism often includes thresholds to trigger alerts only for significant deviations, reducing noise. Sophisticated detection systems integrate multi-layered analyses, incorporating behavioral insights and rule-based assessments. This hybrid approach combines the speed of automated detection with the accuracy of context-driven analysis.
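
Here is a minimal version of the threshold idea. It learns a per-host baseline (the mean and standard deviation of a metric such as outbound connections per minute) from historical data. It then alerts only when a live value deviates by more than a chosen number of standard deviations. The metric, the host names, and the 3-sigma default are illustrative assumptions, not recommended settings.

```python
from statistics import mean, pstdev


class ZScoreDetector:
    """Flags values that deviate strongly from a per-host baseline."""

    def __init__(self, threshold=3.0, min_std=1e-6):
        self.threshold = threshold
        self.min_std = min_std  # avoids division by zero for constant baselines
        self.baseline = {}

    def fit(self, history):
        """history: mapping of host -> list of past metric values."""
        for host, values in history.items():
            self.baseline[host] = (mean(values), max(pstdev(values), self.min_std))

    def score(self, host, value):
        """Number of standard deviations between value and the host's mean.

        Raises KeyError for hosts that have no baseline yet.
        """
        mu, sigma = self.baseline[host]
        return abs(value - mu) / sigma

    def check(self, host, value):
        """Return an alert dict for significant deviations, else None."""
        z = self.score(host, value)
        if z > self.threshold:
            return {"host": host, "value": value, "z_score": round(z, 2)}
        return None
```

Raising the threshold reduces noise at the cost of missing subtler deviations. That is the same trade-off the prose above describes.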

Lanir specializes in founding new tech companies for enterprise software. His experience covers:

- Assembling and nurturing a great team
- Moving from early-stage funding to late-stage growth
- Growing from one design partner to hundreds of enterprise customers
- Taking a product from MVP to enterprise grade
- Working from low-level kernel engineering to AI/ML and big data
- Expanding from one advisory board to a long list of shareholders and board members from the world's largest VCs

Tips from the Expert

In my experience, here are tips that can help you better implement and manage AI intrusion detection systems:

- Deploy deception technologies to augment detection: Use honeypots and honeynets integrated with AI detection systems. These deceptive environments can trigger early alerts and enrich training data with attacker behavior not typically seen in production systems.

- Use federated learning for cross-organization threat insights: In environments with privacy constraints, federated learning enables organizations to collaboratively train AI models on decentralized data without sharing raw logs, enhancing detection coverage across sectors.

- Integrate graph-based anomaly detection: Many advanced attacks follow complex paths across systems. Graph-based AI models can map entity relationships and uncover subtle, multi-hop intrusion patterns not evident in isolated log analyses.

- Apply differential privacy in model training: Protect sensitive user data by incorporating differential privacy techniques during training. These techniques limit how much a trained model can reveal about any individual user's activity while still letting it learn general patterns.

- Benchmark model drift with synthetic attack generation: Regularly generate synthetic intrusion scenarios using tools like MockingBird or Adversarial Robustness Toolbox to assess model drift and retraining needs without relying solely on real breach data.
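
The graph-based tip can be illustrated with a small sketch. It treats observed host-to-host connections as directed edges and learns which edges are normal during a baseline window. It then looks for chains of new edges spanning several hops, a rough proxy for lateral movement. The host names and the two-hop minimum are hypothetical. Production graph models are considerably more sophisticated.

```python
from collections import defaultdict


def new_edge_chains(baseline_edges, window_edges, min_hops=2):
    """Return paths made only of edges never seen during the baseline.

    baseline_edges / window_edges: iterables of (src, dst) host pairs.
    A path of >= min_hops new edges (A -> B -> C ...) suggests multi-hop
    movement that no single log line would reveal.
    """
    known = set(baseline_edges)
    graph = defaultdict(list)
    for src, dst in window_edges:
        if (src, dst) not in known:
            graph[src].append(dst)

    # Start from nodes no new edge points to, so sub-paths are not re-reported.
    targets = {dst for dsts in graph.values() for dst in dsts}
    starts = [node for node in graph if node not in targets] or list(graph)

    chains = []

    def extend(path):
        extended = False
        for nxt in graph.get(path[-1], []):
            if nxt not in path:  # avoid cycles
                extend(path + [nxt])
                extended = True
        if not extended and len(path) - 1 >= min_hops:
            chains.append(path)

    for start in starts:
        extend([start])
    return chains
```

Each new edge on its own may look harmless. Only the chained path (for example, a web server reaching a laptop that then reaches a file server and a database) stands out.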

Machine Learning Techniques in AI Intrusion Detection

Traditional ML Algorithms

AI intrusion detection systems use a variety of machine learning algorithms to detect malicious activity. Supervised learning algorithms, such as decision trees, support vector machines (SVM), and logistic regression, are commonly used when labeled datasets of normal and attack behaviors are available. These models learn patterns that distinguish benign from malicious activity and are effective when high-quality training data is present.

Unsupervised learning algorithms, like k-means clustering, DBSCAN, and principal component analysis (PCA), are useful when labeled data is scarce. These algorithms model normal behavior and flag deviations as anomalies, making them suitable for detecting previously unknown threats. Semi-supervised techniques combine both approaches, using a small amount of labeled data with a larger unlabeled dataset to improve accuracy.
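
The unsupervised approach can be sketched with a small k-means implementation:

1. Cluster feature vectors from normal traffic.
2. Record how far each training point sits from its nearest centroid.
3. Flag new points that lie farther away than any training point did.

The two features (say, scaled connections per minute and scaled average bytes per connection) and the choice of k are assumptions for illustration.

```python
import math
import random


def kmeans(points, k, iterations=20, seed=0):
    """Plain Lloyd's algorithm; returns a list of k centroids."""
    rng = random.Random(seed)
    centroids = rng.sample(points, k)
    for _ in range(iterations):
        clusters = [[] for _ in range(k)]
        for p in points:
            nearest = min(range(k), key=lambda i: math.dist(p, centroids[i]))
            clusters[nearest].append(p)
        for i, members in enumerate(clusters):
            if members:  # keep the old centroid if a cluster empties out
                centroids[i] = tuple(sum(dim) / len(members) for dim in zip(*members))
    return centroids


def nearest_distance(p, centroids):
    return min(math.dist(p, c) for c in centroids)


def fit_detector(normal_points, k=2):
    centroids = kmeans(normal_points, k)
    # Anything farther than the worst-fitting training point counts as anomalous.
    radius = max(nearest_distance(p, centroids) for p in normal_points)
    return centroids, radius


def is_anomaly(point, centroids, radius):
    return nearest_distance(point, centroids) > radius
```

Using the largest training distance as the radius is a strict choice. In practice a percentile or a safety margin is often used instead, because a single point is rarely representative.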

Deep Learning Models

Deep learning models can detect complex and subtle patterns in high-dimensional data. Convolutional neural networks (CNNs) can be used to analyze structured network traffic data. Recurrent neural networks (RNNs) and their long short-term memory (LSTM) variant are well suited to sequential data, such as time-series logs and user activity traces.

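Autoencoders are neural networks trained to reconstruct their input through a compressed internal representation. When trained only on normal data, they reconstruct normal inputs well and anomalous inputs poorly. A high reconstruction error therefore signals a possible anomaly, which makes autoencoders particularly useful for unsupervised intrusion detection.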

Deep learning models require significant computational resources and large datasets, but they excel at capturing non-linear relationships and detecting sophisticated attack behaviors that traditional models might miss.
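
The reconstruction-error idea behind autoencoders can be shown without a deep learning framework. The sketch below trains a tiny linear autoencoder (two inputs, one hidden unit, no nonlinearity) with stochastic gradient descent on "normal" points that lie near a line. It then scores new points by squared reconstruction error. This is a deliberately shallow stand-in for the deep models described above. The data, learning rate, initial weights, and 3x threshold margin are all assumptions.

```python
import random


def train_linear_autoencoder(data, lr=0.02, epochs=300, seed=42):
    """Learn encoder weights w and decoder weights v so that v * (w . x) ~ x."""
    rng = random.Random(seed)
    w = [0.3, 0.1]  # encoder: 2 inputs -> 1 hidden unit
    v = [0.2, 0.4]  # decoder: 1 hidden unit -> 2 outputs
    for _ in range(epochs):
        samples = list(data)
        rng.shuffle(samples)
        for x in samples:
            h = w[0] * x[0] + w[1] * x[1]            # encode
            r = [x[i] - v[i] * h for i in range(2)]  # reconstruction residual
            # Gradients of sum(r_i^2), computed before either weight vector changes.
            grad_v = [-2 * r[i] * h for i in range(2)]
            v_dot_r = v[0] * r[0] + v[1] * r[1]
            grad_w = [-2 * v_dot_r * x[i] for i in range(2)]
            v = [v[i] - lr * grad_v[i] for i in range(2)]
            w = [w[i] - lr * grad_w[i] for i in range(2)]
    return w, v


def reconstruction_error(x, w, v):
    """Squared error between x and its encode-decode round trip."""
    h = w[0] * x[0] + w[1] * x[1]
    return sum((x[i] - v[i] * h) ** 2 for i in range(2))
```

Points along the learned direction are reconstructed almost perfectly. Points off it lose most of their information in the one-unit bottleneck and score high. Deep autoencoders apply the same principle with nonlinear layers and many more dimensions.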

Ensemble Methods

Ensemble methods improve detection accuracy by combining the outputs of multiple models. Bagging (e.g., random forests) trains base learners on bootstrap resamples of the data and aggregates their predictions, which mainly reduces variance. Boosting (e.g., XGBoost) trains learners sequentially, each one correcting its predecessors' errors, which mainly reduces bias.

In AI intrusion detection, ensemble models are used to balance the strengths and weaknesses of different algorithms. For example, combining a supervised model for known threats with an unsupervised model for novel anomalies can offer broader coverage and reduce false positives.

Stacking, another ensemble approach, uses a meta-model to learn how best to combine predictions from various base models. This layered technique often results in higher performance and more reliable detection in diverse network environments.
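
Here is a small bagging example. Each base learner is a one-level decision tree (a "stump") trained on a bootstrap resample of the labeled data, and the ensemble predicts by majority vote. Random forests extend the same idea with full decision trees and random feature subsets. The two features (failed logins per hour and megabytes sent) and the sample data are hypothetical.

```python
import random


def train_stump(samples):
    """Return a classifier x -> 0/1 built from the single (feature, threshold)
    split with the fewest training errors; predicts 1 when x[feature] > threshold."""
    best = None
    for f in range(len(samples[0][0])):
        for x, _ in samples:
            thr = x[f]
            errors = sum((xi[f] > thr) != bool(yi) for xi, yi in samples)
            if best is None or errors < best[0]:
                best = (errors, f, thr)
    _, feature, threshold = best
    return lambda x: int(x[feature] > threshold)


def train_bagged_stumps(data, n_models=15, seed=0):
    """Train n_models stumps, each on a bootstrap resample of data."""
    rng = random.Random(seed)
    models = []
    for _ in range(n_models):
        bootstrap = [rng.choice(data) for _ in data]  # sample with replacement
        models.append(train_stump(bootstrap))
    return models


def predict(models, x):
    votes = sum(m(x) for m in models)
    return int(votes > len(models) / 2)  # majority vote (odd n_models avoids ties)
```

Because each stump sees a slightly different resample, their individual thresholds vary. The majority vote smooths out that variation, which is the variance reduction bagging is known for.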

AI Intrusion Detection Challenges

Here are some of the main challenges associated with identifying intrusions using AI.

Data Quality

The accuracy of AI intrusion detection systems heavily depends on the quality of input data. Inconsistent, incomplete, or noisy data can lead to flawed model training and unreliable detection. Issues such as mislabeled data, missing logs, or inconsistent formatting can introduce bias and degrade system performance.
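
Some of these issues can be caught automatically before training. The sketch below audits a labeled dataset for two of the problems mentioned above:

- Missing feature values.
- Conflicting labels, where identical feature vectors are labeled both benign and malicious.

It also reports label counts, which expose class imbalance. The record layout (a (features, label) tuple) is hypothetical.

```python
from collections import defaultdict


def audit_dataset(rows):
    """rows: list of (features_tuple, label) pairs, label in {0, 1}.

    Returns a dict summarizing common data-quality problems.
    """
    # Indexes of rows where any feature value is missing.
    missing = [i for i, (features, _) in enumerate(rows) if any(v is None for v in features)]

    # Identical feature vectors that carry more than one label suggest mislabeling.
    labels_by_features = defaultdict(set)
    for features, label in rows:
        labels_by_features[features].add(label)
    conflicting = [f for f, labels in labels_by_features.items() if len(labels) > 1]

    # Label counts expose class imbalance.
    label_counts = defaultdict(int)
    for _, label in rows:
        label_counts[label] += 1

    return {
        "rows": len(rows),
        "rows_with_missing_values": missing,
        "conflicting_feature_vectors": conflicting,
        "label_counts": dict(label_counts),
    }
```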

Adversarial Attacks

AI models are vulnerable to adversarial attacks—carefully crafted inputs designed to mislead detection systems. Attackers may slightly modify traffic patterns or payloads to appear benign, bypassing anomaly detectors and signature models. These tactics exploit the blind spots in trained models, especially those not hardened against such inputs.
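
For a linear detector the mechanics of such an evasion are easy to see. The gradient of the score with respect to the input is just the weight vector. Nudging each feature against the sign of its weight therefore lowers the score as fast as possible for a given per-feature budget. This is the fast gradient sign method, applied here to a toy linear model. The weights, bias, features, and epsilon are made up for illustration.

```python
def score(weights, bias, x):
    """Toy linear detector: a positive score means 'malicious'."""
    return sum(w * xi for w, xi in zip(weights, x)) + bias


def fgsm_evade(weights, x, epsilon):
    """Fast gradient sign step against a linear model.

    For score = w . x + b the gradient with respect to x is w, so moving each
    feature by -epsilon * sign(w_i) lowers the score by up to epsilon * sum(|w_i|).
    Features are clipped to the [0, 1] range the (hypothetical) model expects.
    """
    def sign(v):
        return (v > 0) - (v < 0)

    return [min(1.0, max(0.0, xi - epsilon * sign(w))) for w, xi in zip(weights, x)]
```

Each feature moves by at most 0.25, yet a sample scoring 0.33 drops to about -0.07 and slips under the alert threshold. Adversarial training, which adds perturbed samples like these (labeled malicious) to the training set, is one common hardening approach.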

False Positives/Negatives

A major challenge in AI-based intrusion detection is balancing false positives (legitimate behavior flagged as malicious) and false negatives (missed actual threats). High false positive rates can overwhelm security teams and erode trust in the system. False negatives, on the other hand, leave networks exposed to undetected breaches.
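
The balance is usually controlled by the alert threshold. Sweeping it over scored, labeled events shows how lowering the threshold trades missed attacks for extra false alarms. The scores and labels here are invented for illustration.

```python
def confusion_at(scores, labels, threshold):
    """Count outcomes when alerting on score >= threshold (label 1 = attack)."""
    tp = fp = fn = tn = 0
    for s, y in zip(scores, labels):
        alert = s >= threshold
        if alert and y:
            tp += 1
        elif alert and not y:
            fp += 1  # legitimate behavior flagged
        elif not alert and y:
            fn += 1  # real attack missed
        else:
            tn += 1
    return {"tp": tp, "fp": fp, "fn": fn, "tn": tn}


def sweep(scores, labels, thresholds):
    """Return (threshold, false positives, false negatives) for each threshold."""
    rows = []
    for t in thresholds:
        c = confusion_at(scores, labels, t)
        rows.append((t, c["fp"], c["fn"]))
    return rows
```

With the sample data in the test below, the sweep yields [(0.3, 2, 0), (0.5, 1, 1), (0.7, 0, 2)]. No threshold eliminates both error types, so the right setting depends on how costly each kind of error is for the team.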

4 Best Practices for Deploying AI Intrusion Detection

Organizations should keep the following practices in mind to ensure effective detection of intrusion threats with AI.

1. Leveraging Threat Intelligence Feeds

Incorporating threat intelligence feeds enables AI systems to detect known threats faster and provides context to improve detection accuracy. These feeds supply continuously updated information on IP addresses, domains, file hashes, and behavioral indicators associated with active threat actors and campaigns.

AI intrusion detection systems can use this intelligence to enrich alert metadata, prioritize high-risk threats, and generate more informed response actions. For instance, if an anomalous login attempt matches a blacklisted IP from a threat feed, the system can escalate the incident severity and trigger automated containment.

To maximize effectiveness, organizations should integrate multiple reputable threat intelligence sources and correlate findings across feeds. Exchanging indicators as STIX objects over the TAXII protocol enables automated, standardized ingestion. Feeds should be filtered and validated to avoid alert fatigue from low-confidence or irrelevant indicators.
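
As a sketch of the enrichment step described above, the code below:

- Loads indicators from several feeds.
- Drops indicators under a confidence cutoff.
- Raises an alert's severity one level for each feed whose indicators match its source IP.

The indicator format, the 0-100 confidence scale, the severity levels, the escalation rule, and the feed names are hypothetical. In practice indicators would typically arrive as STIX objects over TAXII. The addresses come from reserved documentation ranges.

```python
import ipaddress

SEVERITIES = ["low", "medium", "high", "critical"]  # hypothetical severity scale


def load_indicators(feeds, min_confidence=70):
    """feeds: mapping of feed name -> list of (cidr_or_ip, confidence 0-100).

    Returns (network, feed_name) pairs for indicators at or above the cutoff.
    """
    indicators = []
    for feed, entries in feeds.items():
        for value, confidence in entries:
            if confidence >= min_confidence:
                indicators.append((ipaddress.ip_network(value, strict=False), feed))
    return indicators


def enrich_alert(alert, indicators):
    """Attach matching feeds and escalate severity one level per corroborating feed."""
    ip = ipaddress.ip_address(alert["src_ip"])
    matched = sorted({feed for net, feed in indicators if ip in net})
    enriched = dict(alert, threat_feeds=matched)
    if matched:
        level = SEVERITIES.index(alert["severity"]) + len(matched)
        enriched["severity"] = SEVERITIES[min(level, len(SEVERITIES) - 1)]
    return enriched
```

Counting distinct feeds rather than raw matches is one way to reward correlation across sources. A downstream rule could then trigger automated containment only at the highest severity.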

2. Ensuring End-to-End Data Encryption

Data encryption is vital in preserving the confidentiality and integrity of logs, telemetry, and model outputs across the AI intrusion detection pipeline. Encryption ensures that sensitive data—including user activities, system logs, and detection results—cannot be intercepted or manipulated during transit or at rest.

Implementing transport layer security (TLS) for data in motion is essential for all network communications, especially between agents, collectors, and central processing nodes. For data at rest, using AES-256 or similar strong encryption standards protects stored logs and model artifacts. Access to decryption keys should be tightly controlled using hardware security modules (HSMs) or secure key management services.

Integrating hashing and digital signatures helps detect tampering and verify the authenticity of received data. AI systems should be designed to discard or flag any data that fails integrity checks. Auditable encryption practices also support compliance with regulations like GDPR, HIPAA, and CCPA.
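
For integrity checks, a keyed hash (HMAC) is a better fit than a bare hash. An attacker who can modify a log record could simply recompute an unkeyed digest. Asymmetric digital signatures are the alternative when the verifier should not hold the signing key. The sketch below signs each record at the collector and has the pipeline drop anything that fails verification. Key handling is simplified: the hard-coded key stands in for one fetched from an HSM or key management service.

```python
import hashlib
import hmac
import json

# Placeholder only: in practice the key would come from an HSM or a KMS.
KEY = b"example-key-do-not-use"


def sign_record(record, key=KEY):
    """Serialize deterministically and attach an HMAC-SHA256 tag."""
    payload = json.dumps(record, sort_keys=True).encode()
    tag = hmac.new(key, payload, hashlib.sha256).hexdigest()
    return {"payload": payload.decode(), "tag": tag}


def verify_and_load(envelope, key=KEY):
    """Return the record if the tag is valid, else None (caller discards or flags it)."""
    expected = hmac.new(key, envelope["payload"].encode(), hashlib.sha256).hexdigest()
    # compare_digest avoids leaking timing information during comparison.
    if not hmac.compare_digest(expected, envelope["tag"]):
        return None
    return json.loads(envelope["payload"])
```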

3. Comprehensive Testing and Simulations

Testing is critical to validate the effectiveness and resilience of AI intrusion detection systems. This includes functional testing, performance benchmarking, and adversarial testing to ensure the system behaves correctly under diverse threat scenarios.

Organizations should use a mix of synthetic datasets, replayed network traffic, and live red team exercises to simulate real-world conditions. Simulations should cover a wide range of threats—such as brute force attempts, insider threats, malware infections, and lateral movement—to test the system’s sensitivity and accuracy. Tools like Metasploit, Caldera, or custom testbeds can enable these tests.

Testing should also evaluate system behavior under load, ensuring it scales with network traffic and does not introduce unacceptable latency. Results from simulations can inform model tuning, alert threshold adjustments, and incident response workflows. Regular, structured testing cycles, paired with post-mortem reviews, help refine detection logic.
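
Simulation results are easier to act on when summarized per scenario. The sketch below reports detection rate, false-positive rate, and worst-case alert latency for each scenario. For each simulated event it needs to know whether the event was part of an attack and whether the system alerted. The event format and scenario names are hypothetical.

```python
from collections import defaultdict


def summarize(events):
    """events: dicts with scenario, is_attack, alerted, latency_ms (None if no alert)."""
    stats = defaultdict(lambda: {"attacks": 0, "detected": 0, "benign": 0,
                                 "false_alerts": 0, "max_latency_ms": 0})
    for e in events:
        s = stats[e["scenario"]]
        if e["is_attack"]:
            s["attacks"] += 1
            if e["alerted"]:
                s["detected"] += 1
                # Latency is only tracked for true detections.
                s["max_latency_ms"] = max(s["max_latency_ms"], e["latency_ms"])
        else:
            s["benign"] += 1
            if e["alerted"]:
                s["false_alerts"] += 1
    report = {}
    for name, s in stats.items():
        report[name] = {
            "detection_rate": s["detected"] / s["attacks"] if s["attacks"] else None,
            "false_positive_rate": s["false_alerts"] / s["benign"] if s["benign"] else None,
            "max_latency_ms": s["max_latency_ms"],
        }
    return report
```

A per-scenario breakdown shows where tuning is needed. For example, a detector may catch lateral movement reliably but miss half of the brute-force attempts.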

4. Establishing Multilayered Defense Strategies

A single AI-based intrusion detection system cannot provide comprehensive protection on its own. Effective cybersecurity requires a defense-in-depth strategy, where multiple, complementary security layers work together to detect, delay, and contain threats.

AI systems should be integrated with endpoint detection and response (EDR), network segmentation, firewalls, email security gateways, and user access control systems. For example, if AI detects unusual user behavior, access policies can dynamically adjust to enforce multi-factor authentication or restrict access to sensitive resources.

With orchestration tools like SOAR (Security Orchestration, Automation, and Response), alerts from AI systems can automatically trigger workflows such as isolating compromised machines, blocking IP addresses, or notifying security teams. Cross-layer correlation allows detection of stealthy threats that span multiple domains.
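
The adaptive-access and orchestration ideas above amount to mapping detections to response actions. The sketch below is a hypothetical, framework-free playbook table. The alert types, score cut-offs, and action names are invented. A real SOAR platform would express this in its own playbook format and call actual EDR, firewall, and identity APIs.

```python
# Hypothetical playbook: alert type -> tiers of (min_score, actions),
# listed from the most to the least severe tier.
PLAYBOOK = {
    "unusual_user_behavior": [
        (0.9, ["suspend_session", "notify_soc"]),
        (0.6, ["require_mfa"]),
    ],
    "lateral_movement": [
        (0.7, ["isolate_host", "block_ip", "notify_soc"]),
        (0.4, ["notify_soc"]),
    ],
}


def plan_response(alert):
    """Return the actions for the first (most severe) tier the alert's score meets."""
    for min_score, actions in PLAYBOOK.get(alert["type"], []):
        if alert["score"] >= min_score:
            return list(actions)  # copy so callers cannot mutate the playbook
    return ["log_only"]
```

Keeping the mapping in data rather than code lets security teams adjust thresholds and actions without redeploying the detector.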

AI Intrusion Detection with Faddom

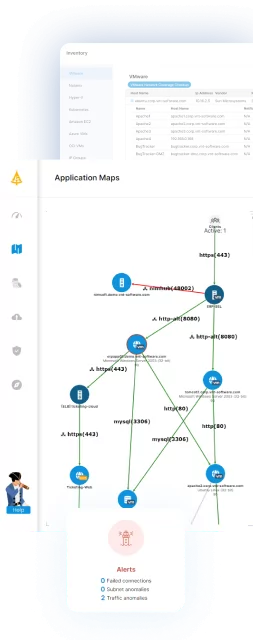

As attackers become more stealthy and unpredictable, intrusion detection requires more than static signatures or isolated alerts. Faddom provides real-time visibility and AI-driven threat detection with application dependency mapping, offering a comprehensive view of your hybrid infrastructure.

Its anomaly detection engine tracks all server communications, learning what is normal in your environment and flagging suspicious behavior, such as lateral movement, port scans, or DNS anomalies, without requiring manual rules. This results in faster detection, fewer false positives, and a better understanding of your network’s vulnerabilities before they can be exploited.

If you want to see how AI intrusion detection looks inside your infrastructure, book a personalized demo with one of our Faddom experts.